CHAPTER 16

Kinds of Research

As you’ve seen in previous chapters, neuroscientific research has made astounding advances in the past 150 years. The development of new research tools and technologies has driven these discoveries, from the first images of individual neurons to revealing the genetic causes of neurological disorders. This chapter introduces you to some of the most important research methods used to understand the brain, including unusual types of microscopy, animal models, and cutting-edge molecular techniques.

TOOLS FOR ANATOMY

Anatomy is the study of structure — most often, the structure of biological organisms. For the brain, anatomy starts with the structure of neurons, which are among the most complex and diverse cell types in our bodies. Scientists were first able to observe neurons in the late 19th century, thanks to histological techniques that start with a very thin slice of brain tissue to which scientists apply stains or other compounds that add contrast or color to specific structures. They then view the tissue with a light microscope, which passes visible light through the thin slice and lenses that make the structures look up to 1,000 times larger than they do with the naked eye.

Histology is the study of how cells form tissues. Histological techniques can reveal changes in the density of cell types or the presence of molecules that can suggest a particular disease. These techniques have helped illuminate the brain changes underlying some neurodegenerative disorders. For example, histological methods have shown that an enzyme that breaks down acetylcholine is associated with the brain plaques and tangles of Alzheimer’s disease. And in the brains of Parkinson’s disease patients, histology has revealed the death of neurons that normally control movements through dopamine signaling.

Long after light microscopes gave scientists their first glimpses of neurons, a debate bubbled in the scientific community: Are neurons individual cells or a mesh of physically interconnected cell bodies? Neurons are so densely packed that the answer wasn’t clear until the 1950s, after the development of a new technology called electron microscopy. Electron microscopes can produce detailed images of cellular structures magnified hundreds of thousands of times by directing a beam of electrons through very thin slices of tissue, then enlarging and focusing the image with electromagnetic lenses. With this technology, researchers were finally able to see that neurons are not physically continuous but, instead, are individual cells.

Although they are individual cells, neurons do act in networks, communicating across small gaps called synapses, where the axon terminal of one cell meets a dendrite or cell body of another cell. One method for mapping the signaling pathways within these networks involves injecting radioactive molecules or “tracers” into the cell body of a neuron. Researchers monitor the movement of radioactivity down the neuron’s axon, showing where that neuronal path leads. A similar technique involves tracers that can actually travel across synapses, from one neuron to the next. Scientists have used such tracers to map the complex pathways by which information travels from the eyes to the visual cortex.

Another technique for examining brain anatomy is magnetic resonance imaging, or MRI. Developed in the 1980s, MRI is widely used by researchers and doctors to view a detailed image of brain structure. MRI equipment uses radio waves and strong magnets to create images of the brain based on the distribution of water within its tissues. MRI is harmless and painless to the person being scanned, although it does require sitting or lying in a narrow tube, and the procedure can be quite noisy. With an MRI scan, researchers can tell the difference between the brain’s gray matter and white matter. Gray matter consists of the cell bodies of neurons, as well as their dendrites and synapses. White matter mostly contains axons wrapped in the fatty myelin coating that gives these regions their white color. Based on the distribution of water in the tissues, MRI images clearly differentiate between cerebrospinal fluid, the water-rich cells of gray matter, and fatty white matter.

TOOLS FOR PHYSIOLOGY

Information is conveyed along the neuronal pathways that crisscross through our brains as electrical activity traveling down axons. To study this activity, researchers measure changes in the electrical charge of individual neurons using techniques of electrophysiology. A thin glass electrode is placed inside a neuron to measure the voltage across its cell membrane, which changes when the neuron is activated. This technique can measure neuron activity inside the brains of living lab animals such as rats or mice, enabling scientists to study how neurons transmit electrical information in their normal physical context. Alternatively, a slice of brain can be kept “alive” for a short time in a Petri dish, if the right environment (temperature, pH, ion concentrations, etc.) is provided. In an isolated brain slice, researchers can better identify the exact cell they are recording from and can infuse drugs into the Petri dish to determine their effects on the brain.

Using these methods, scientists have made critical discoveries about synaptic plasticity — the capacity of a synapse to become stronger or weaker in response to sensory inputs or other activity. For example, repeatedly stimulating a neuron by training an animal in a particular task, or by direct electrical stimulation, increases the synaptic strength and the chance that the downstream neuron will react to the incoming signal.

A disadvantage of electrophysiology, as described above, is that the techniques are highly invasive. However, another method, called electroencephalography or EEG, is able to record human brain activity without invasive or harmful procedures. In EEG, about 20 thin metal discs are placed on the scalp. Each disc is connected by thin wires to a machine that records the activity of neurons near the brain surface. This approach has been especially useful for understanding epilepsy and the stages of sleep. However, it does not provide information at the level of individual neurons.

Researchers who need to look at individual neurons in a living brain can use a technique called two-photon microscopy. A lab animal such as a fly or mouse must be genetically modified so that some of its neurons produce a protein that glows when a laser beam shines on them. Two-photon microscopy has enabled scientists to understand changes in the brain during normal processes like learning, as well as changes that occur over the course of a disease — for example, watching how the branches on neurons near Alzheimer’s-like plaques break down over time.

TOOLS FOR GENETICS

The human genome is made up of 3 billion pairs of DNA letters or “bases.” This multitude of adenine (A), cytosine (C), guanine (G), and thymine (T) bases comprises an estimated 20,000 genes that spell out instructions for making proteins, along with regulatory and other non-coding DNA regions whose functions are not fully known. Scientists study genetics in many ways, such as following diseases or other traits through family pedigrees or identifying the exact order of DNA bases (the DNA “sequence”) that code for a given trait. More recent genetic tools enable scientists to manipulate genes and other genetic features to better understand how the brain works and how to treat it in cases of dysfunction or disease.

Scientists often don’t know which gene or other DNA feature controls a trait. At the outset, a particular trait could be encoded on any of the 23 pairs of chromosomes in a typical human cell. But with genetic linkage studies, researchers have begun to map gene locations. First, researchers must identify another trait with a known chromosomal location that tends to be inherited with or “linked” to the trait of interest. This technique, which narrows down the likely location of the gene of interest, was the first step toward identifying the genetic basis of many neurological disorders.

When you think about mutations, you probably think of harmful changes in one or several DNA bases within a gene. But some disorders result from an overabundance of copies or repeats of a stretch of DNA. This is the case with Huntington’s disease. The normal HTT gene has about a dozen repeats of a small stretch of DNA within the gene, but Huntington’s patients can have more than 100 of these repeats. Researchers now use DNA chips or microarrays to identify such variations in copy number. The “array” of a microarray refers to the thousands of spots arrayed in rows and columns on the surface of the chip; each spot contains a known DNA sequence or gene, which can grab onto corresponding bits of the genome being analyzed. Using this tool, scientists are able to compare DNA samples of two people, perhaps one healthy and one with a disorder, to see if certain pieces of DNA are repeated more in one person than in the other. Another type of microarray helps researchers determine if a patient has a chromosomal translocation — a chunk of a chromosome that has been misplaced onto another chromosome.
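The two-sample comparison described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular microarray platform processes its data: the probe names, intensity values, and the 0.5 cutoff are all hypothetical, and the sketch assumes that each spot's signal grows with the number of DNA copies that bind to it. It summarizes each spot with a log2 ratio between the two samples, so a value near 0 means similar copy number, a positive value suggests extra copies, and a negative value suggests missing copies.

```python
import math

def log2_ratios(patient, reference):
    """Log2 ratio of patient to reference signal for each probe found in both samples."""
    return {
        probe: math.log2(patient[probe] / reference[probe])
        for probe in patient
        if probe in reference
    }

def flag_copy_changes(ratios, cutoff=0.5):
    """Label probes whose ratio suggests extra ("gain") or missing ("loss") copies."""
    calls = {}
    for probe, ratio in ratios.items():
        if ratio >= cutoff:
            calls[probe] = "gain"
        elif ratio <= -cutoff:
            calls[probe] = "loss"
    return calls

# Hypothetical spot intensities (arbitrary units), invented for illustration
reference_sample = {"probe_A": 100.0, "probe_B": 100.0, "probe_C": 100.0}
patient_sample = {"probe_A": 100.0, "probe_B": 200.0, "probe_C": 50.0}

ratios = log2_ratios(patient_sample, reference_sample)
calls = flag_copy_changes(ratios)
print(calls)
```

In this toy data set, probe_B is twice as bright in the patient sample and probe_C is half as bright, so they are the only two spots flagged.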

Recent years have seen great advances in DNA sequencing methods, allowing researchers to more efficiently and affordably explore the exact DNA sequence that might underlie brain disorders. In the early 2000s, the Human Genome Project made public the vast majority of the human genome sequence; in the years that followed, the science of genomics has enhanced scientists’ understanding of brain function at the level of genes, cells, and circuits. Genomics can help identify genetic variations that cause conditions ranging from depression to schizophrenia to movement disorders.

Genetics research now goes far beyond reading the sequence of bases in the genome. In the last few years, scientists have harnessed a molecular tool that can edit the genome more precisely and efficiently than was previously possible. This tool, called CRISPR (which stands for Clustered Regularly Interspaced Short Palindromic Repeats), evolved as a bacterial immune system that targets viral invaders. Scientists have harnessed CRISPR’s components to home in on specific DNA sequences in lab animals and human cell cultures. By tethering DNA-cutting enzymes to this targeting system, scientists can recreate mutations found in patients with neurological disorders, or even insert new bits of DNA to test their effect. With CRISPR, scientists have been able to mimic Alzheimer’s in rodents, in order to study the disease and its potential treatments. CRISPR is also used to study mutated human neurons in Petri dishes. Researchers can observe how mutations that cause autism, Parkinson’s disease, or other conditions affect neuronal growth and function.

Optogenetics is another fascinating intersection of genetic tools with brain science. This ingenious technique allows researchers to control brain activity with flashes of light. Scientists genetically modify a lab animal like a mouse so that its neurons produce a light-responsive protein. Then, optical fibers are inserted into the brain to allow light to shine on those neurons — either activating or silencing them. Optogenetics has helped scientists better understand how neurons work together in circuits. This technique has also been used to control animal behaviors ranging from sleep to drug addiction.

Oddly enough, genetics is not always about genes. As mentioned above, much of the human genome contains DNA sequences that are not genes, whose job is to regulate gene activity. These regulatory sequences, and the enzymes that make changes to them, help determine under what conditions (in what cells, at what age, etc.) a gene is expressed or repressed. These epigenetic changes occur in cells when chemical tags are placed on the regulatory regions of certain genes; the tags influence whether those genes will be turned on or off. In the past decade, epigenetics research has begun to clarify the role of gene regulation in brain development and learning. Epigenetics has also revealed how mutations in the regulatory regions of DNA can cause disease, just as mutations can in genes.

Genetics in Neurological Diseases

The impact of mutations varies from person to person, and from disease to disease. A particular mutation might explain some cases of a disorder but not others, or it could be only one of several genetic changes affecting a patient. Lissencephaly is a brain malformation in which the surface of the brain is smooth, unlike normal brains whose surfaces have ridges and grooves. It affects development. Babies with lissencephaly start having spasms in the first months of life and develop drug-resistant epilepsy and severe intellectual and motor disabilities. Although about 70 percent of these patients have mutations in the LIS1 gene, at least two other mutations have been associated with the condition. Another complex genetic condition, Kabuki syndrome, is marked by intellectual disabilities, a distinctive facial appearance, slow growth in infancy, and other physical problems. Kabuki syndrome is hard to diagnose because some symptoms, such as intellectual disability, range from mild to severe. DNA sequencing has found that most patients, but not all, have mutations in the KMT2D gene — and some patients carry the mutations in only some of their cells. In addition, people with Kabuki syndrome may have mutations in other genes that function like KMT2D.

It is also possible for a person to carry a mutation but exhibit no outward signs. Fragile X syndrome, the most common form of congenital intellectual disability in males, is caused by an excessive number of DNA sequence (CGG) repeats within the FMR1 gene. The protein product of the FMR1 gene, which is important for synapse function, is disrupted by these repeats. While some people with elevated numbers of the sequence repeats may not be affected, they are carriers with a risk of passing it on to their children.
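Counting sequence repeats like the CGG repeats mentioned above is simple to express in code once a DNA sequence is available as text. The sketch below is only an illustration: the two sequences are invented toy strings, not real FMR1 sequence, the function name is hypothetical, and no clinical thresholds are implied. It finds the longest uninterrupted run of a repeat unit.

```python
import re

def longest_repeat_run(sequence, unit="CGG"):
    """Return the largest number of back-to-back copies of `unit` found in `sequence`."""
    pattern = re.compile(f"(?:{re.escape(unit)})+")
    runs = [len(match.group(0)) // len(unit)
            for match in pattern.finditer(sequence.upper())]
    return max(runs, default=0)

# Toy sequences, invented for illustration only
short_allele = "ATG" + "CGG" * 5 + "TTA"
long_allele = "ATG" + "CGG" * 12 + "TTA"

print(longest_repeat_run(short_allele))
print(longest_repeat_run(long_allele))
```

Comparing the counts from two samples shows which one carries the longer run of repeats.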

TOOLS FOR BEHAVIOR

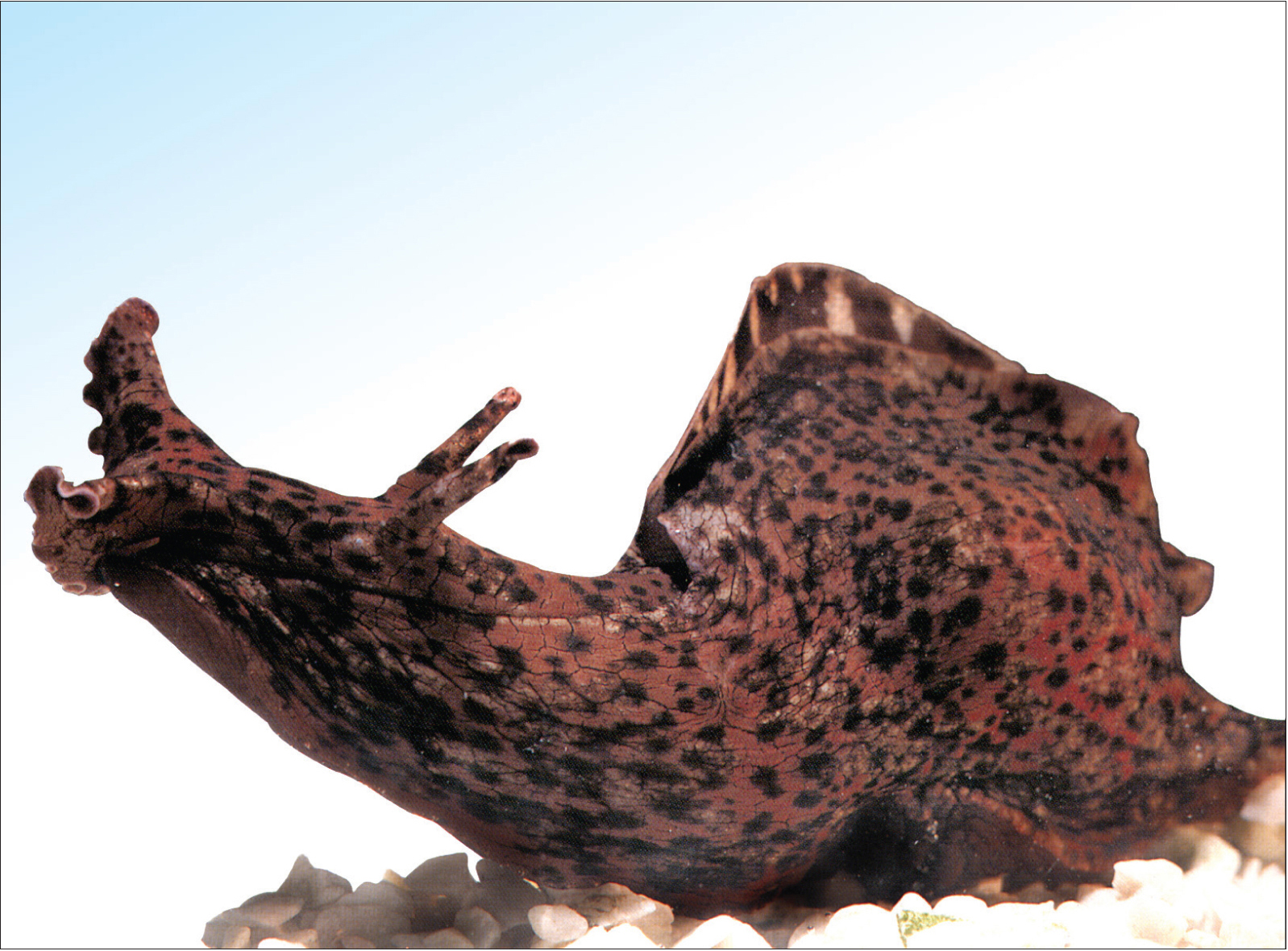

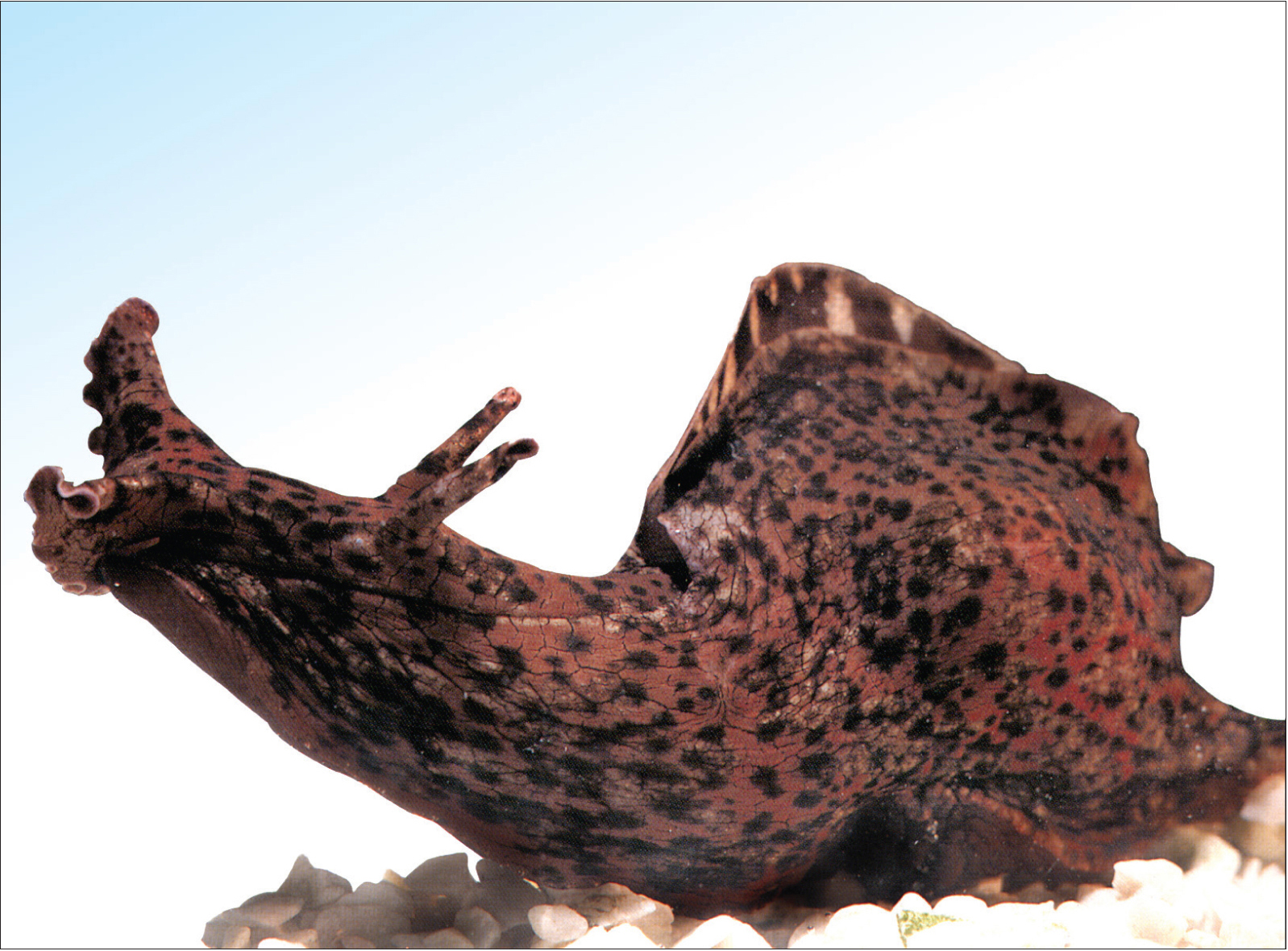

To understand how brain function drives behaviors in humans, researchers often turn to animal models. An eight-inch long marine slug may not look like a very promising model of brain function but, over the years, the animal known as Aplysia has helped scientists uncover many principles of learning and memory. Aplysia has relatively few neurons (around 10,000, compared to approximately 86 billion in humans), but some of its neurons are large enough to be seen with the naked eye. Aplysia also exhibits simple behaviors that can be modified with training. For example, Aplysia will reflexively withdraw its gill after receiving an electric shock to its tail. It can be trained to withdraw its gill in response to an innocuous touch which, during training, was paired with an electric shock. Scientists have discovered how the timing of training sessions affects learning, and have identified proteins and other molecules that strengthen synapses so the neuronal response is greater the next time Aplysia is stimulated. Many of the molecules and processes identified in Aplysia’s learning are also involved in human learning.

The fruit fly Drosophila is also commonly used to study behavior, especially how genes control behavior. For example, variations in a gene called ‘foraging’ determine whether flies tend to roam around as they eat or sit in one place. Flies with mutations in another gene called ‘timeless’ don’t have normal circadian rhythms. Mutations have been identified that affect the full gamut of Drosophila behaviors — from aggression to courtship, as well as learning and memory. Many of the affected genes have correlates in humans.

Addiction presents one of the most pressing challenges in studying human behavior — how to better understand it and how to treat it. Some lab animals like rats will consume alcohol and drugs even if accompanied by a bitter taste or foot shock. Scientists have uncovered changes in the brains of animals exhibiting such addiction-like behaviors that mirror changes seen in the brains of humans with addiction disorders. Interestingly, some breeds of rats are very likely to exhibit addiction and relapse behaviors while others are more resistant. By comparing the genetics of two breeds of rats with different predispositions to cocaine addiction, scientists identified genes that were differentially turned on or off in the two breeds; the study suggests that these genes, and their epigenetic regulation, play a role in susceptibility to addiction. This type of research helps scientists understand why some people are more prone to addiction or relapse, and could suggest ways to identify people at risk.

National Human Genome Research Institute.

Because its nervous system is much simpler than that of a mammal, the sea slug Aplysia was an important animal model in early studies on the neurobiology of learning and memory.

Paulomelo.adv.

Drosophila melanogaster, pictured here, is widely used for studying many aspects of the brain and behavior.

Behavior is also studied directly in humans. Early mapping of human behaviors to specific brain regions was done by observing personality changes in people who had lost small regions of their brain due to injuries or surgeries. For example, people who have lost their frontal lobe often become inconsiderate and impulsive. Modern imaging techniques, described in greater detail below, also help scientists to pair brain regions with certain behaviors. For example, imaging allows researchers to see certain brain areas “light up” when a person is shown human faces, but not when they see faces of other animals. These techniques are also useful to better understand brain disorders — such as identifying brain regions responsible for auditory hallucinations in schizophrenia.

TOOLS FOR BIOCHEMISTRY

Although we talk a lot about the electrical signals transmitted along neurons, the brain also communicates with molecular and chemical signals. Neurotransmitters are chemical messengers that travel across a synapse, carrying signals from one neuron to the next. Using a method called microdialysis, researchers can monitor neurotransmitters in action. With thin tubes inserted into the brain, scientists are able to collect tiny volumes of liquid from just outside neurons and then analyze the compounds in that liquid. For example, a researcher could analyze liquid captured during learning to identify molecules that are important for that process.

Microdialysis can also be used to deliver compounds to the brain. Many drugs have powerful effects on the brain, so scientists can use these substances to tweak brain function in order to understand it better. Pharmacology, the study of the effects of drugs, is also dedicated to identifying new drugs to treat conditions like pain or psychiatric illness, as well as understanding addiction and other negative consequences of drug use.

Another important method employed to study the molecules and chemicals at work in the brain is mass spectrometry. Once a sample has been collected — perhaps by using microdialysis — the compounds it contains are ionized (given an electric charge) and then sent through an electric or magnetic field. The behavior of each molecule in that field indicates its mass. That information alone provides valuable clues for identifying a molecule. Mass spectrometry has also been very useful in exploring neurodegenerative disorders. For example, one treatment for Parkinson’s disease causes severe side effects, including involuntary movements. With mass spectrometry, researchers have identified the location within the brain where this side effect is caused; that information could point the way to interventions that can reduce or prevent those side effects.
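To make the link between an ion's behavior in a field and its mass concrete, here is a minimal Python sketch. The text above does not say which kind of instrument is used, so the sketch assumes one common design, a time-of-flight instrument, in which every ion is accelerated by the same voltage and then timed as it drifts down a tube. An ion of charge q accelerated through voltage V gains kinetic energy qV = ½mv², so heavier ions travel more slowly and arrive later. The voltage, tube length, and ion masses below are hypothetical values chosen for illustration.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs
DALTON = 1.66053906660e-27           # kilograms per atomic mass unit

def flight_time(mass_da, charge, voltage, tube_length):
    """Seconds for an ion to drift down the tube after acceleration through `voltage`."""
    mass_kg = mass_da * DALTON
    speed = math.sqrt(2 * charge * ELEMENTARY_CHARGE * voltage / mass_kg)
    return tube_length / speed

def mass_per_charge(time_s, voltage, tube_length):
    """Invert a measured flight time to get the mass-to-charge ratio (daltons per charge)."""
    return 2 * ELEMENTARY_CHARGE * voltage * (time_s / tube_length) ** 2 / DALTON

# Hypothetical instrument settings and ions, for illustration only
VOLTAGE = 20_000.0   # volts
TUBE_LENGTH = 1.0    # meters

t_light = flight_time(150.0, 1, VOLTAGE, TUBE_LENGTH)
t_heavy = flight_time(600.0, 1, VOLTAGE, TUBE_LENGTH)

print(t_light, t_heavy)
print(mass_per_charge(t_light, VOLTAGE, TUBE_LENGTH))
```

Because flight time scales with the square root of mass, the ion that is four times heavier takes twice as long to arrive, and the measured time can be converted back into a mass-to-charge ratio.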

Rama.

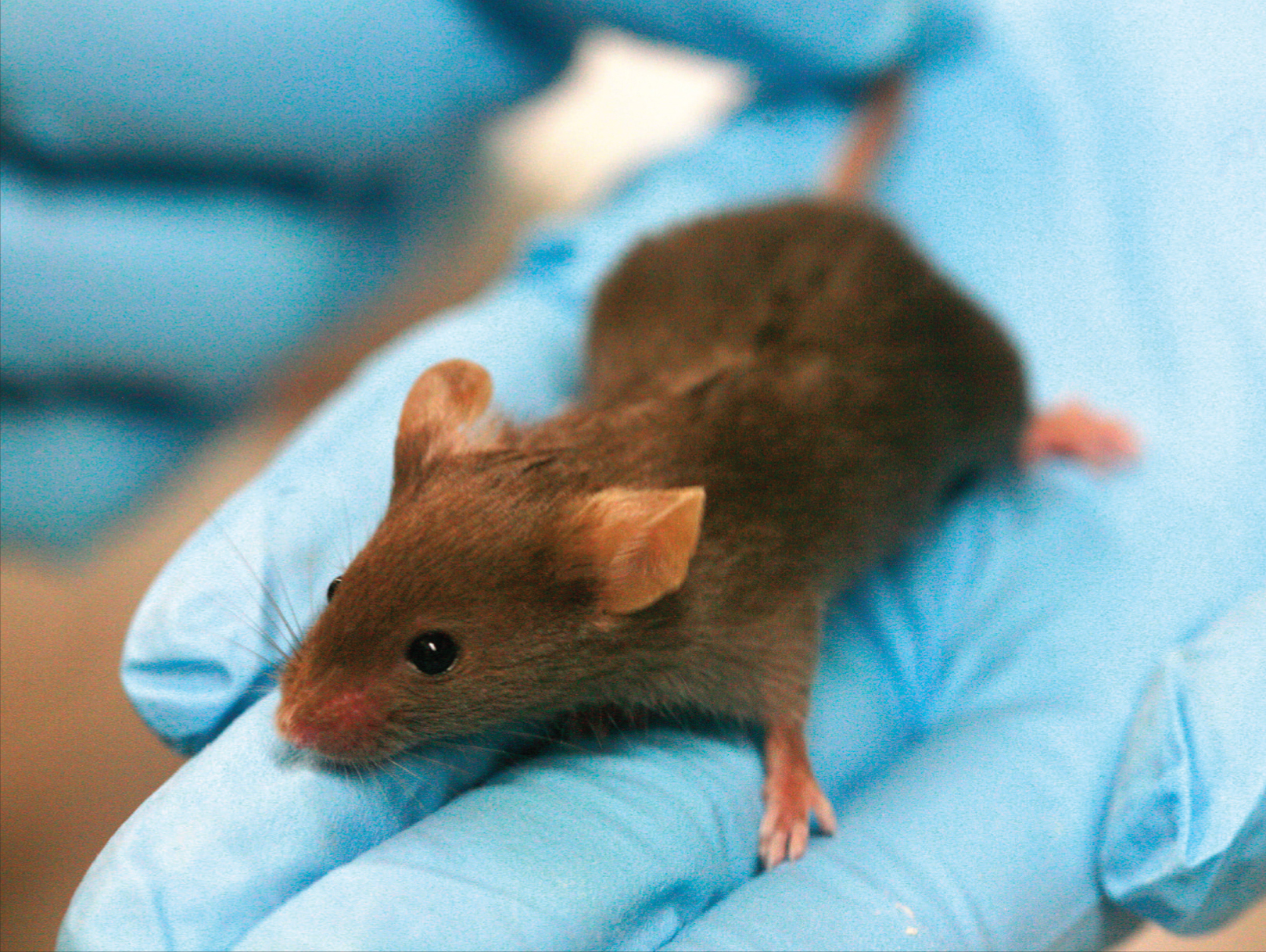

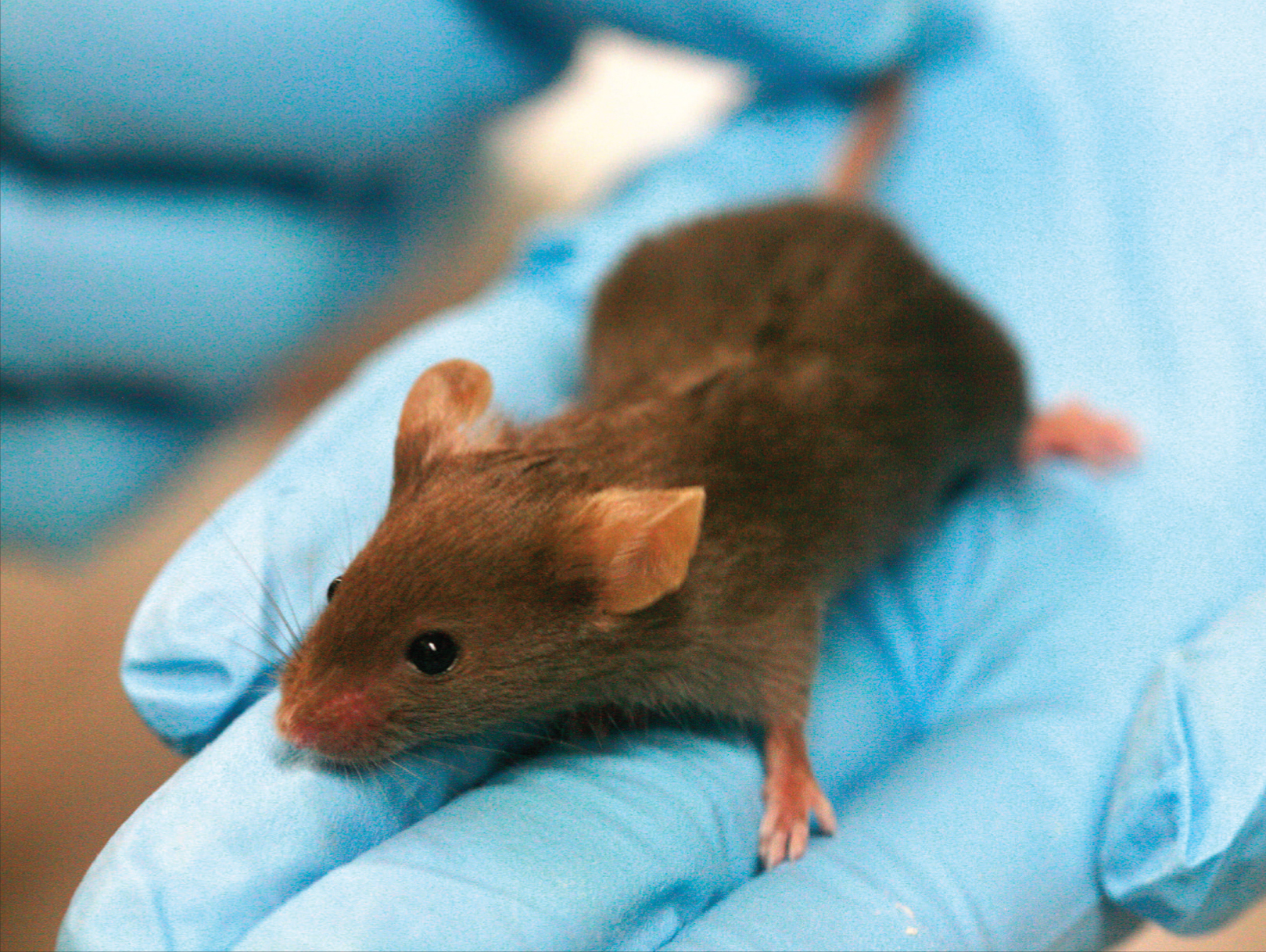

Mice are one of the most important animal models in neuroscience research.

iStock.com/nattrass.

Magnetic resonance imaging (MRI) scans are used to create detailed images of organs like the brain.

TOOLS USED FOR HUMAN RESEARCH

Many of the methods we have discussed are too invasive to use in humans. But several methods for imaging human brain function do not require holes in the skull or other lasting physical changes. Functional MRI (fMRI) can be used to follow changes in the brain activity of a person lying inside an MRI scanner. The machine is tuned so that it detects blood flow as well as differences in oxygen-rich and oxygen-poor blood, based on the idea that more active regions of the brain need more oxygen and nutrients, which are supplied by fresh oxygenated blood. While this is an indirect indication of neuron activity, it can pinpoint brain activity to fairly small regions.

fMRI provides an indirect view of neuron activity, but magnetoencephalography (MEG) detects actual electrical currents coursing through groups of neurons. When neuron activities are synchronized, their combined electrical currents produce weak magnetic fields that MEG equipment can detect. A person undergoing the procedure sits or lies down, with his or her head surrounded by a helmet-shaped device that can sense magnetic fields. MEG has been useful in a variety of studies: from how the auditory cortex responds to sounds to identifying where epileptic seizures start in a patient’s brain. MEG is useful for detecting rapid changes in brain activity (temporal resolution) but it does not provide the precise location of that activity (spatial resolution). For this reason, researchers can combine MEG data with fMRI data to obtain good anatomical detail from fMRI and high-speed readings of brain activity from MEG.

Near-infrared spectroscopy (NIRS) is similar to fMRI in that it monitors the flow of oxygenated blood as a way to estimate neuron activity. A major difference is that NIRS is only useful for measuring activity near the surface of the brain and does not provide as much detail; however, it is far less expensive and cumbersome than fMRI. NIRS is also more comfortable for the person undergoing the procedure, as the setup essentially involves wearing a cap with wiring hooked to it. Some of the wires transmit harmless laser beams (~1 milliwatt of power or less) into the brain while others detect the light after it travels through the brain. NIRS can be used to determine the extent of brain injuries and to monitor oxygen levels in the brains of patients under anesthesia. Because of its portability, NIRS is very useful for studying brain activity during tasks, such as driving down the highway, that can’t take place inside an fMRI scanner.

Positron emission tomography (PET) detects short-lived radioactive compounds that have been injected into the bloodstream. The radioactive compounds could be oxygen or glucose, or they might be a neurotransmitter. PET traces where these compounds go in the body. The location of labeled oxygen can indicate blood flow, while a labeled neurotransmitter can show which brain regions are using that signaling molecule. PET can also detect the amyloid plaques that are a hallmark of Alzheimer’s disease; this technique could one day enable us to identify the disease in its early stages. Although PET has good temporal resolution like MEG, it lacks the detailed spatial resolution of MRI.

Some methods used in human research can change brain activity. In transcranial magnetic stimulation (TMS), a coil that generates a magnetic field is placed near a person’s head. The magnetic field can penetrate the skull, temporarily activating or silencing a region of the cortex. TMS is used to treat psychiatric disorders such as anxiety, depression, and post-traumatic stress disorder and could be an effective option for patients with conditions that do not respond to medications.

Although neuroscience has progressed by leaps and bounds since neurons were first viewed under a microscope, many phenomena observed with these techniques are not fully understood. For example, mysteries still surround data obtained with EEG. EEG shows that several different brain regions have characteristic rhythms or oscillations — one pattern in the visual cortex, another in the sensory motor cortex, and so on. Even though this method of examining brain activity has been used since 1929, the generation of these patterns (sometimes called brain waves) at the level of neural circuits is not well understood.

One branch of neuroscience that can help bridge findings from the microscopic to the whole-brain level is computational neuroscience. Researchers in this field develop theories or models about how the brain processes information, then test these models against real-world data. For example, they can examine the data and images from EEG or fMRI, then develop mathematical models to explain the underlying neuron and circuit activity. Data from the many methods discussed in this chapter — electrophysiology, molecular studies, anatomy, and functional brain scans — can all contribute to these computational models.
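As a small example of the kind of mathematical model used in this field, the sketch below simulates a "leaky integrate-and-fire" neuron, a widely used simplified model. It is not taken from this chapter, and all parameter values are hypothetical round numbers chosen for illustration. The model tracks a single membrane voltage that drifts back toward a resting value, rises when current is injected, and produces a spike followed by a reset whenever it crosses a threshold.

```python
def simulate_lif(input_current, dt=0.1, tau=10.0, v_rest=-65.0,
                 v_threshold=-50.0, v_reset=-65.0, resistance=10.0):
    """Simulate a leaky integrate-and-fire neuron.

    input_current: one current value per time step (arbitrary units)
    dt, tau: time step and membrane time constant, in milliseconds
    Returns the voltage trace (millivolts) and the list of spike times (milliseconds).
    """
    v = v_rest
    voltages, spike_times = [], []
    for step, current in enumerate(input_current):
        # Voltage leaks back toward rest and is pushed up by the injected current
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dv * dt
        if v >= v_threshold:
            spike_times.append(step * dt)
            v = v_reset  # reset after each spike
        voltages.append(v)
    return voltages, spike_times

STEPS = 1000  # 100 milliseconds at dt = 0.1

_, spikes_none = simulate_lif([0.0] * STEPS)
_, spikes_weak = simulate_lif([1.0] * STEPS)
_, spikes_strong = simulate_lif([2.0] * STEPS)

print(len(spikes_none), len(spikes_weak), len(spikes_strong))
```

With these assumed parameters, the weak input never pushes the voltage to threshold, while the stronger input produces a regular train of spikes. A researcher could compare output like this against electrophysiology recordings and adjust the model until the two agree.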

This chapter provides an introduction to research methods that have driven, and continue to drive, discovery in neuroscience. As new techniques and technologies emerge, scientists will add them to their repertoire of techniques that can deepen our understanding of the brain and suggest new ways to help people whose lives are affected by brain disorders.
