CHAPTER 5

Thinking, Planning & Language

From the moment you wake up, your brain is bombarded by stimuli: the sound of birds singing or the rumble of trucks, the smell of coffee, the brightness and warmth of sunlight streaming through your window. Fortunately, your brain is adept at filtering this flood of information and making a decision about what actions to take. Is it a workday or a weekend? What would taste good for breakfast? How warm a sweater do you need? Every moment you’re conscious, you are thinking, planning, and making decisions.

But how do you think? What is happening in your brain when you reflect on last night’s party or puzzle over what to wear today? Can other animals think the way that humans do? In order to think, your brain has to make sense of the noisy, chaotic world around you. The first filter for that information is your perception, which arises from the senses whose processing we considered in Chapter 2. The next step is interpreting those perceptions, which your brain does by comparing them to memories of past experiences and observations.

Constructing Representations

Because your brain’s capacity to store this information in short-term memory is limited, it builds fairly simple representations of people, places, objects, and events as references. To really make sense of our moment-to-moment perceptions, the brain relies on its complex network of associations assembled from prior experience. These connections enable your brain to deal with variable perceptions. For example, you can identify a dog even if it is a different breed or color than any you have seen before. A bicycle still registers as a bicycle, even if it is obscured so that only one wheel is visible.

Constructing these representations relies on semantic memory, a form of declarative knowledge that includes general facts and data. Scientists are just beginning to understand the nature and organization of cortical areas involved in semantic memory, but it appears that specific cortical networks are specialized for processing certain types of information. Studies using functional brain imaging have revealed regions of the cortex that selectively process different categories of information such as animals, faces, tools, or words.

Recordings of the electrical activity of individual brain cells show that specific, single cells may fire when someone looks at photographs of a particular person, but remain quiet when viewing photographs of other people, animals, or objects. So-called “concept cells” work together in assemblies. For example, the cells encoding the concepts of needle, thread, sewing, and button may be interconnected. Such cells, and their connections, form the basis of our semantic memory.

Concept cells reside in the temporal lobe, a brain area that specializes in object recognition. Scientists made great strides in understanding memory by studying H.M., a man with severe amnesia, who was discussed in Chapter 4. Similarly, our understanding of thinking and language has been informed by studying people with unique deficits caused by particular patterns of brain damage.

Consider the case of D.B.O., a 72-year-old man who suffered multiple strokes. In tests run by researchers, D.B.O. could identify only 1 out of 20 different common objects by sight. He also struggled when he was asked to take a cup and fill it with water from the sink. He approached several different objects (a microwave, water pitcher, garbage can, and roll of paper towels), saying “This is a sink … Oh! This one could be a sink … This is also a sink,” before finally finding the real sink and filling the cup. But in striking contrast, he could easily identify objects when he closed his eyes and felt them; he could also name things that he heard, such as a rooster’s “cock-a-doodle-doo.”

Researchers concluded that D.B.O.’s strokes had damaged his brain in ways that prevented visual input from being conveyed to anterior temporal regions where semantic processing occurs. This blocked his access to the names of objects that he could see, but not his ability to name objects he could touch.

Regional Specialization and Organization

Experts have learned from people like D.B.O. that damage to certain areas of the temporal lobes leads to problems with recognizing and identifying visual stimuli. This condition, called agnosia, occurs in several forms, depending on the exact location of the brain damage.

One such region is the fusiform face area (FFA). Located on the underside of the temporal lobe, the FFA is critical for recognizing faces. This distinct area responds more strongly to images that contain faces than to images that do not, and bilateral damage to this area results in prosopagnosia or “face blindness.” Similarly, a nearby region called the parahippocampal place area responds to specific locations, such as pictures of buildings or particular scenes. Other areas are activated only by viewing certain inanimate objects, body parts, or sequences of letters.

Within these brain areas, information is organized into hierarchies, as complex skills and representations are built up by integrating information from simpler inputs. One example of this organization is the way the brain represents words. Regions that encode words include the posterior parietal cortex, parts of the temporal lobe, and regions in the prefrontal cortex (PFC). Together, these areas form the semantic system, a constellation that responds more strongly to words than to other sounds, and even more strongly to natural speech than to artificially garbled speech. The semantic system occupies a significant portion of the human brain, especially compared to the brains of other primates. This difference might help explain humans’ unique ability to use language.

Separate areas within this system encode representations of concrete or abstract concepts, action verbs, or social information. Words related to each other, such as “month” and “week,” tend to activate the same areas, whereas unrelated words, such as “month” and “tall,” are processed in separate areas of the brain. Many studies using a technique called functional magnetic resonance imaging (fMRI) to measure brain activity in response to words have found more extensive activation in the left hemisphere, compared to the right hemisphere. However, when words are presented in a narrative or other context, they elicit fMRI activity on both sides of the brain.

Written language involves additional brain areas. The visual word form area (VWFA) in the fusiform gyrus recognizes written letters and words — a finding that is remarkably consistent across speakers of different languages. Studies of the VWFA reveal connections between it and the brain areas that process visual information, bridges that help the brain link meaning to written language. Likewise, there are specific brain areas that represent numbers and their meaning. These concepts are represented in the parietal cortex with input from the occipitotemporal cortex, a region that participates in visual recognition and reading. These regions work together to identify the shape of a written number or symbol and connect it to its concept, which can be broad: For example, the number “3” is applied to sets of objects, the concept of trios, and the rhythm of a waltz.

Thus, through constructing hierarchical, connected representations of concepts, the brain is able to build meaning. All of these skills depend on the fluid and efficient retrieval and manipulation of semantic knowledge.

LANGUAGE PROCESSING

In mid-19th century France, a young man named Louis Victor Leborgne came to live at the Bicêtre Hospital in the suburbs south of Paris. Oddly, the only word he could speak was a single syllable: “Tan.” In the last few days of his life, he met a physician named Pierre Paul Broca. Conversations with the young man, whom the world of neuroscience came to know as Patient Tan, led Broca to understand that Leborgne could comprehend others’ speech and was responding as best he could, but “tan” was the only expression he was capable of uttering.

After Leborgne died, Broca performed an autopsy and found a large damaged area, or lesion, in a portion of the frontal lobe. Since then, we have learned that damage to particular regions within the left hemisphere produces specific kinds of language disorders, or aphasias. The portion of the frontal lobe where Leborgne’s lesion was located is still called Broca’s area, and it is vital for speech production. Further studies of aphasia have greatly increased our knowledge about the neural basis of language.

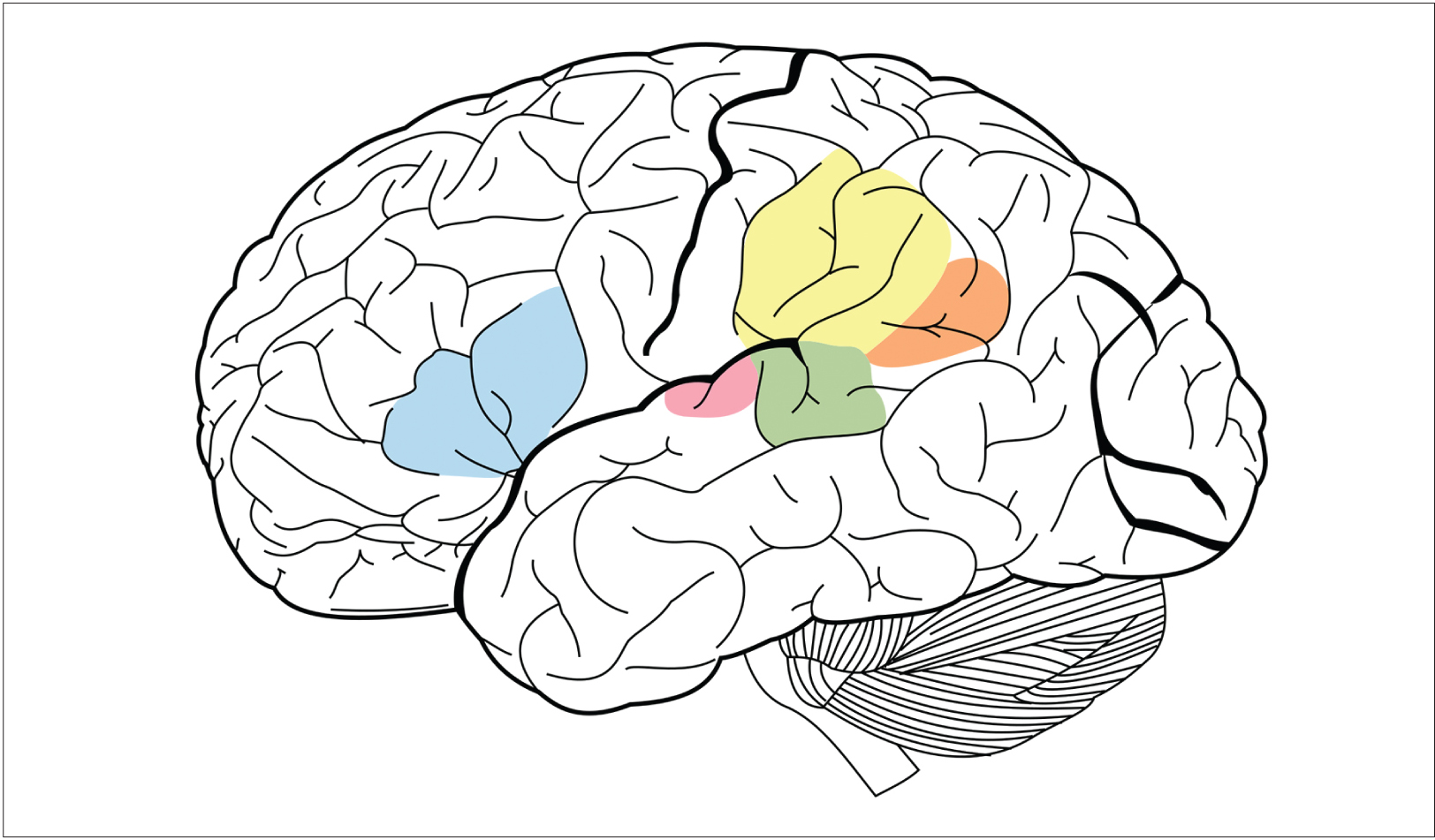

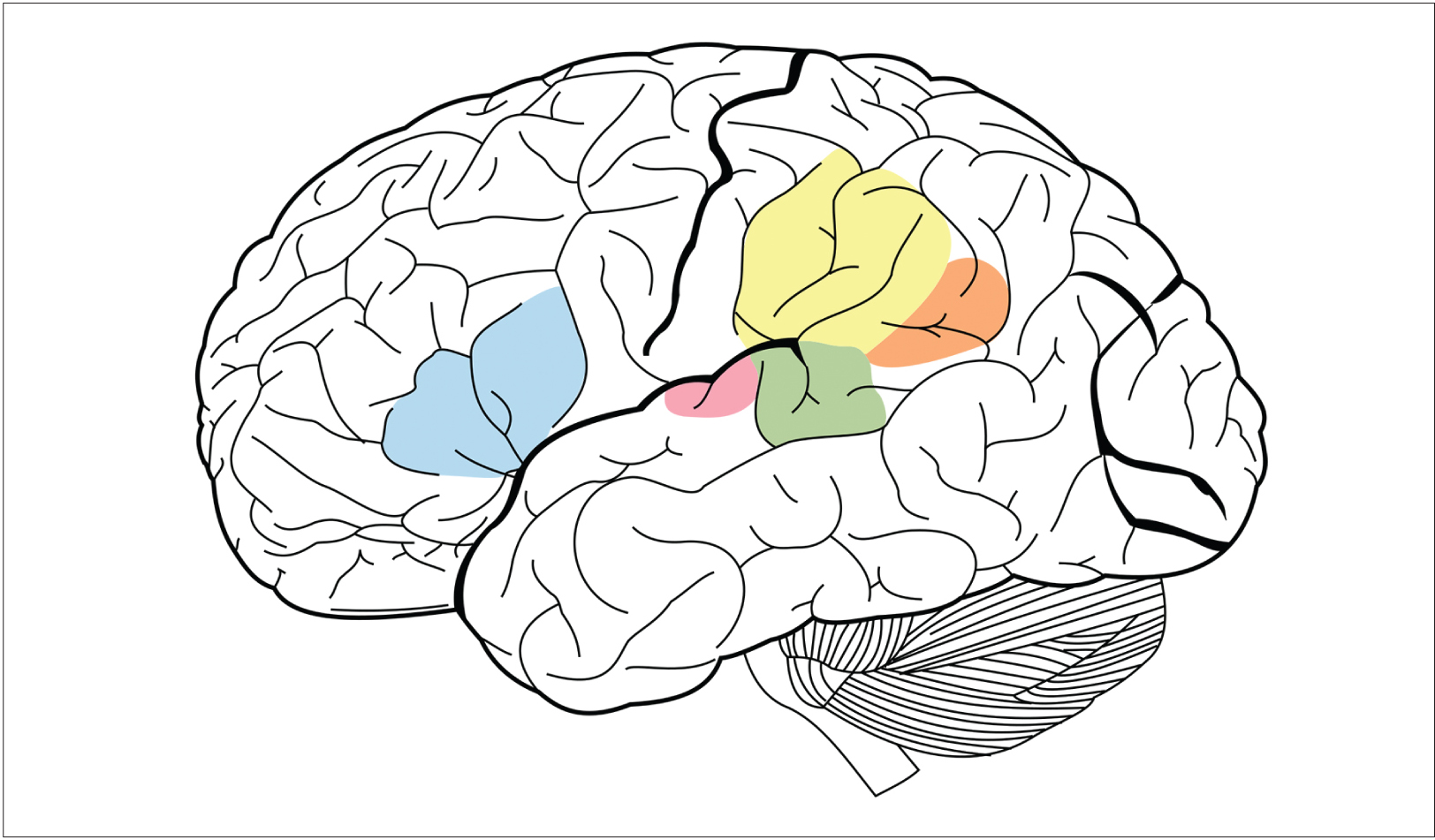

James.mcd.nz.

Language is a complex cognitive ability, involving several areas of the brain. The blue area in this image is Broca’s area, which is vital for speech production. The green area is Wernicke’s area, which is responsible for understanding others’ speech. They and other areas work in tandem for many types of communication.

Broca’s aphasia is also called “non-fluent” aphasia, because speech production is impaired but comprehension is mostly intact. Damage to the left frontal lobe can produce non-fluent aphasias, in which speech output is slow and halting, requires great effort, and often lacks complex word or sentence structure. But while their speaking is impaired, non-fluent aphasics still comprehend spoken language, although their understanding of complex sentences can be poor.

Shortly after Broca published his findings, a German physician, Carl Wernicke, wrote about a 59-year-old woman he referred to as S.A., who had lost her ability to understand speech.

Unlike patient Leborgne, S.A. could speak fluently, but her utterances made no sense: she offered absurd answers to questions, used made-up words, and had difficulty naming familiar items. After her death, Wernicke determined that she had damage in her left temporal lobe. This caused her difficulty in comprehending speech, but not producing it, a deficit that is now known as “Wernicke’s aphasia,” or “fluent aphasia.” Fluent aphasic patients might understand short individual words, and their speech can sound normal in tone and speed, but it is often riddled with errors in sound and word selection and tends to be unintelligible.

Another type of aphasia is called “pure word deafness,” which is caused by damage to the superior temporal lobes in both hemispheres. Patients with this disorder are unable to comprehend heard speech on any level. But they are not deaf. They can hear speech, music, and other sounds, and can detect the tone, emotion, and even the gender of a speaker. But they cannot link the sound of words to their meaning. (They can, however, make perfect sense of written language, because visual information bypasses the damaged auditory comprehension area of the temporal lobe.)

Although Broca’s and Wernicke’s work emphasized the role of the left hemisphere in speech and language ability, scientists now know that recognizing speech sounds and individual words actually involves both the left and right temporal lobes. Nonetheless, producing complex speech is strongly dependent on the left hemisphere, including the frontal lobe as well as posterior regions in the temporal lobe. These areas are critical for accessing appropriate words and speech sounds.

Reading and writing require the involvement of additional brain regions — those controlling vision and movement. Earlier, we mentioned that sensory processing of written words entails connections between the brain’s language areas and the areas that process visual perceptions. In the case of reading and writing, many of the same centers involved in speech comprehension and production are still essential, but require input from visual areas that analyze the shapes of letters and words, as well as output to the motor areas that control the hand.

New Insights in Language Research

Although our understanding of how the brain processes language is far from complete, recent molecular genetic studies of inherited language disorders have provided important new insights. One language-associated gene, called FOXP2, codes for a special type of protein that switches other genes on and off in particular parts of the brain. Rare mutations in FOXP2 result in difficulty making mouth and jaw movements in the sequences required for speech. The disability is also accompanied by difficulty with spoken and written language.

Remarkably, many insights into human speech have come from studies of birds, where it is possible to induce genetic mutations and study their effects on singing. Just as human babies learn language during a special developmental period, baby birds learn their songs by imitating a vocal model (a parent or other adult bird) during an early critical period. Like babies’ speech, birds’ song-learning also depends on auditory feedback — their ability to hear their own attempts at imitation. Interestingly, studies have also revealed that FOXP2 mutations can disrupt song development in young birds, much as they do in humans.

Imaging studies have revealed that disruption of FOXP2 can severely affect signaling in the dorsal striatum, part of the basal ganglia located deep in the brain. Specialized neurons in the dorsal striatum express high levels of the product of FOXP2. Mutations in FOXP2 interrupt the flow of information through the striatum and result in speech deficits. These findings show the gene’s importance in regulating signaling between motor and speech regions of the brain. Changes in the nucleotide sequence of FOXP2 might have influenced the development of spoken language in humans and explain why humans speak and chimpanzees do not.

Functional imaging studies have also identified brain structures not previously known to be involved in language. For example, portions of the middle and inferior temporal lobe participate in accessing the meaning of words. In addition, the anterior temporal lobe is under intense investigation as a site that might participate in sentence-level comprehension. Recent work has also identified a sensory-motor circuit for speech in the left posterior temporal lobe, which is thought to help communication between the systems for speech recognition and speech production. This circuit is involved in speech development and is likely to support verbal short-term memory.

COGNITION AND EXECUTIVE FUNCTION

Executive Function

Some of the most complex processes in the brain occur in the prefrontal cortex (PFC), the outer, folded layers of the brain located just behind your forehead. The PFC is one of the last regions of the brain to develop, not reaching full maturity until adulthood. This is one reason why children’s brains function quite differently from those of adults. The processing that takes place in this area is known as executive function. Like the chief executive officer (CEO) of a company, the PFC supervises everything else the brain does, taking in sensory and emotional information and using this information to plan and execute decisions and actions.

Specific areas of the PFC support executive functions such as selecting, rehearsing, and monitoring information being retrieved from long-term memory. To serve these functions, the PFC also interacts with a large network of posterior cortical areas that encode specific types of information — for example, visual images, sounds, words, and the spatial location in which events occurred.

Although executive function is more fully developed in humans, other animals display some aspects of it. Studies in nonhuman primates have shown that neurons in the PFC keep information active or “in mind” while the animal is carrying out a task that depends on it. This is analogous to working memory in humans, which is a form of executive function.

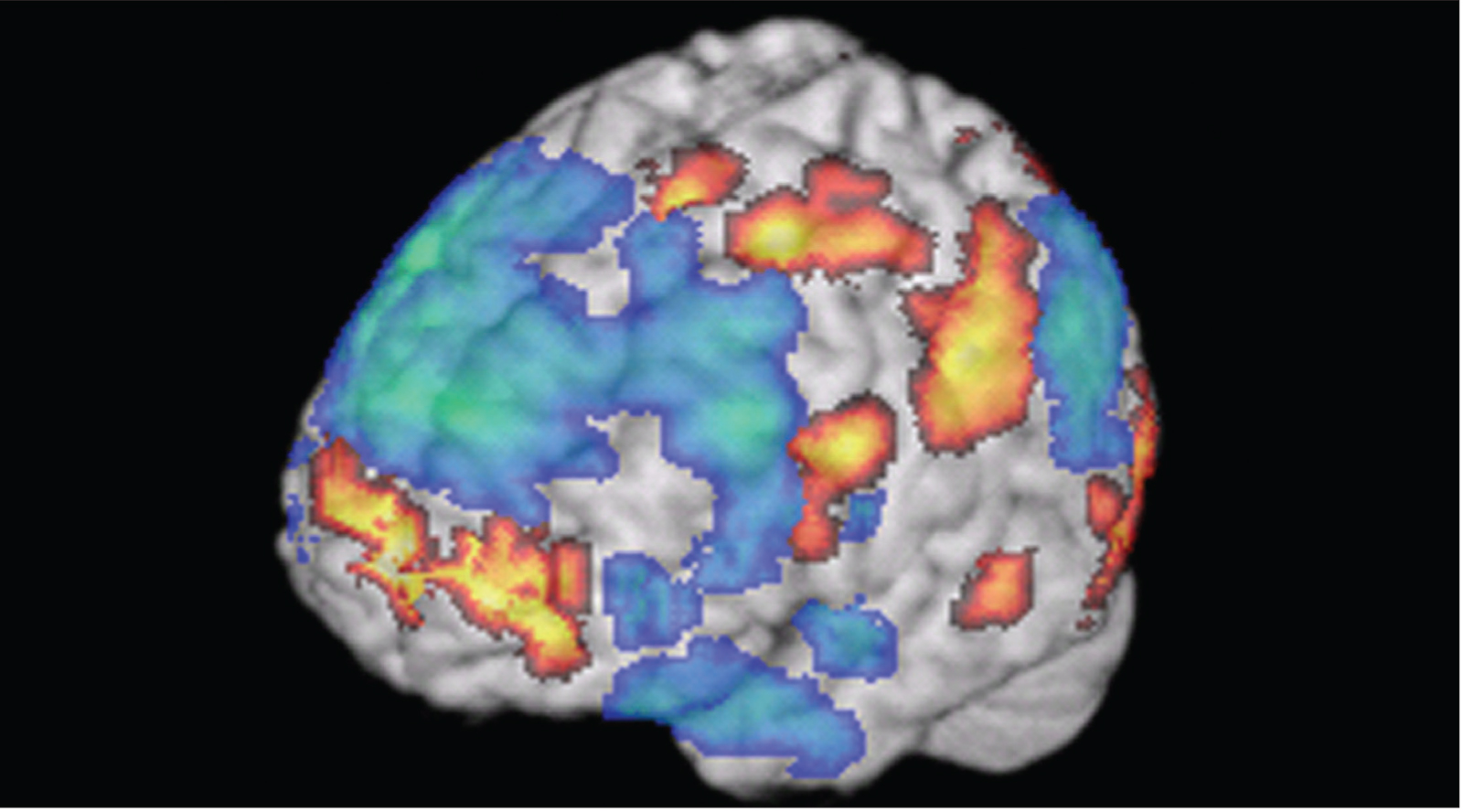

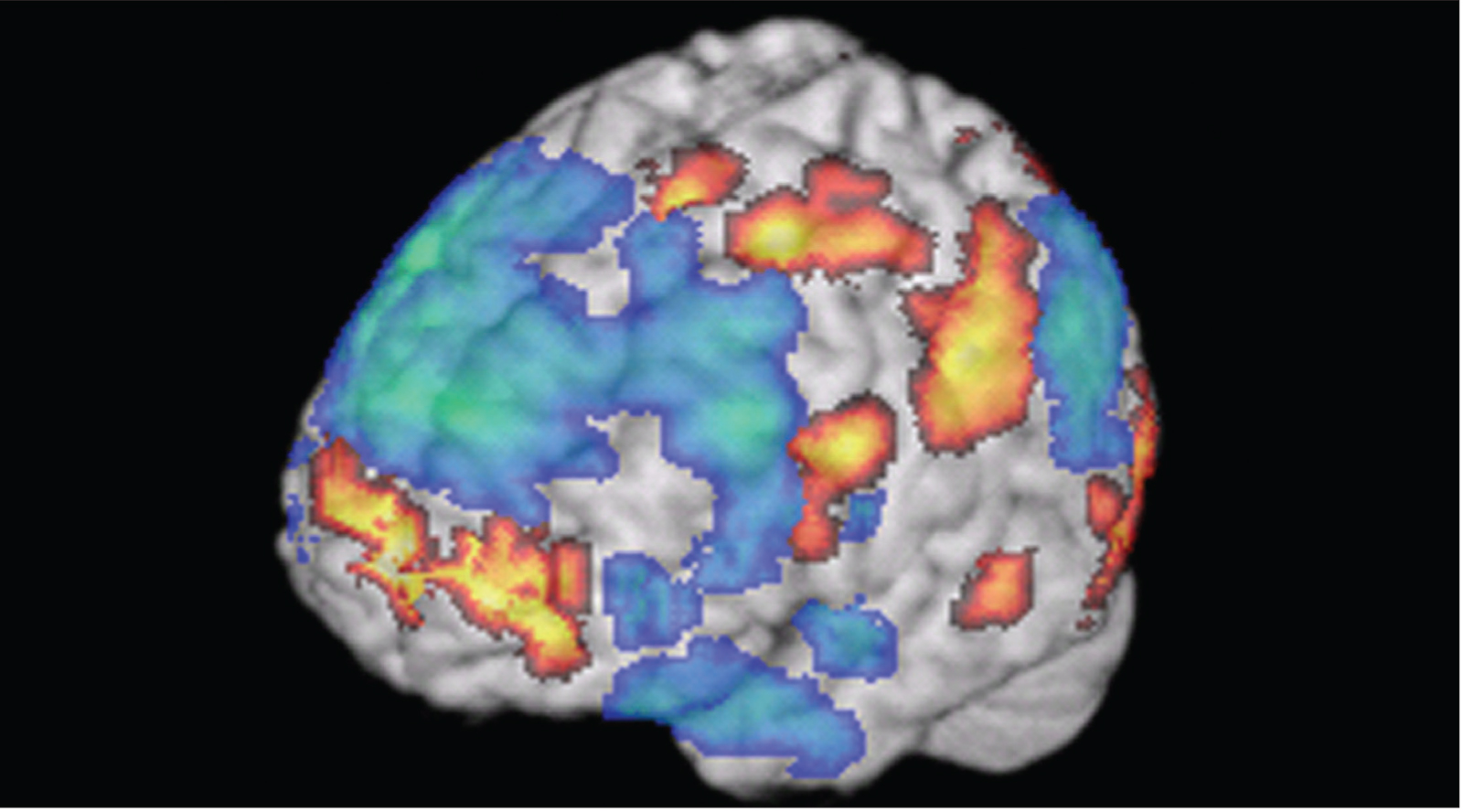

National Institute on Deafness and Other Communication Disorders, National Institutes of Health.

Scientists can observe the brain activity underlying advanced cognitive functions, such as creativity. In this image, researchers were able to measure activity in the brains of jazz musicians while they improvised. This gave them a clue as to what brain regions are associated with creative thinking.

Executive function can be considered a blend of three core skills: inhibition, working memory, and shifting. Inhibition is the ability to suppress a behavior or action when it is inappropriate, such as calling out loudly when one is in an audience or classroom. Even toddlers demonstrate hints of a developing inhibition ability, as shown in their ability to delay (for at least a short period of time) eating a treat placed in front of them. By the time children reach preschool, they can tackle more complex inhibition tasks, such as “Luria’s hand game,” in which they are told to make a fist when shown a finger and a finger when shown a fist. This test, which requires inhibiting their more automatic imitation of adults, is very hard for three-year-olds, but four-year-olds perform significantly better. As people grow older, they wield this ability ever more skillfully.

In addition to inhibition, this hand game and similar tasks rely on working memory, which is the ability to hold a rule in mind while you decide how to act (in this case, doing the opposite of the demonstrator). When you have new experiences, information initially enters your working memory, a transient form of declarative or conscious memory. Working memory depends on both the PFC and the parietal lobe. It gives you the ability to maintain and manipulate information over a brief period of time without external aids or cues, such as remembering a phone number without writing it down. Most people can memorize and recite a string of numbers or words over a brief period of time, but if they are distracted or there is a time lag of many minutes or hours, they are likely to forget. This shows the limited duration of working memory, which requires active rehearsal and conscious focus to maintain.

The third key component of executive function is shifting, or mental flexibility, which allows you to adjust your ongoing behavior when conditions require it. For example, in the card sorting task, people must figure out (from the examiner’s simple “yes/no” responses) that they must switch from sorting by one rule, such as suit, and begin sorting by another, such as number. People with damage to their PFC have great difficulty doing this and tend to stick with the first sorting rule. Children’s ability to shift successfully between tasks follows a developmental course through adolescence. It appears that preschool-aged children can handle shifts between simple task sets in a card-sorting task and later handle unexpected shifts between increasingly complex task sets. Both behavioral and physiological measures indicate that the ability to monitor one’s errors is evident during adolescence; by mid-adolescence, more complex task switching reaches adult-like levels. Because of its greater need for multiple cognitive processes, mature shifting likely involves a network of activity in many regions of the PFC.
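The logic of the card sorting task described above can be sketched as a toy simulation. This is a minimal illustration under stated assumptions, not a clinical protocol or a model of real brain activity: the `Sorter` class, the `run_task` function, the two-rule set, and the "switch after two consecutive 'no' responses" threshold are all hypothetical choices made for this example. A flexible sorter changes rules after repeated negative feedback, while a perseverating sorter (loosely analogous to a person with PFC damage) keeps using the first rule.

```python
from dataclasses import dataclass

# Hypothetical, simplified model of a card-sorting task (illustration only).
RULES = ("suit", "number")


@dataclass
class Sorter:
    flexible: bool        # True: can shift rules; False: perseverates
    rule: str = "suit"    # rule the sorter currently believes is correct
    misses: int = 0       # consecutive "no" responses under the current rule

    def sort(self, examiner_rule: str) -> bool:
        """Sort one card and return the examiner's yes/no feedback.

        Simplifying assumption: feedback is "yes" only when the sorter's
        rule matches the examiner's rule.
        """
        correct = self.rule == examiner_rule
        if correct:
            self.misses = 0
        else:
            self.misses += 1
            # Assumed threshold: a flexible sorter abandons a rule
            # after two consecutive "no" responses.
            if self.flexible and self.misses >= 2:
                self.rule = next(r for r in RULES if r != self.rule)
                self.misses = 0
        return correct


def run_task(sorter: Sorter, trials_per_rule: int = 6) -> int:
    """The examiner sorts by suit first, then silently switches to number.

    Returns the number of "yes" responses the sorter earns.
    """
    schedule = ["suit"] * trials_per_rule + ["number"] * trials_per_rule
    return sum(sorter.sort(rule) for rule in schedule)


if __name__ == "__main__":
    print("flexible:", run_task(Sorter(flexible=True)))
    print("perseverating:", run_task(Sorter(flexible=False)))
```

In this sketch the flexible sorter loses only the two trials it needs to detect the rule change, while the perseverating sorter fails every trial after the switch.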

Many of the changes in executive functioning ability are gradual, although the changes are more apparent in young children. The PFC is the main region implicated in executive functioning; however, the skills that fall under this umbrella use inputs from all over the brain. Interestingly, the activity level associated with executive function actually decreases as children and adolescents mature, reflecting the fact that these circuits become more fine-tuned and efficient as the neuron networks mature.

Decision-Making

The fundamental skills of executive function — inhibition, working memory, and shifting — provide the basis for other skills. One of these is decision-making, which requires a person to weigh values, understand rules, plan for the future, and make predictions about the outcomes of choices.

You make many different types of decisions every day. Some of these rely primarily on logical reasoning — for example, when you compare the timetables for the bus and subway to determine the quickest way to get to a friend’s house. Other decisions have emotional consequences at stake, like when the person you’re trying to impress offers you a cigarette — your desire to be accepted might outweigh your rational consideration of smoking’s harms. This is an example of affective decision-making (Chapter 4).

Both types of decision-making involve the brain’s prefrontal cortex (PFC). In particular, activity in the lateral PFC is especially important in overriding emotional responses in decision-making. The area’s strong connections with brain regions related to motivation and emotion, such as the amygdala and nucleus accumbens, seem to exert a sort of top-down control over emotional and impulsive responses. For example, brain imaging studies have found the lateral PFC is more active in people declining a small monetary reward given immediately in favor of receiving a larger reward in the future. This area is one of the last in the brain to mature (usually in a person’s late 20s), which helps explain why teens can have trouble regulating emotions and controlling impulses.

The orbitofrontal cortex, a region of the PFC located just behind the eyes, appears to be important in affective decision-making, especially in situations involving reward and punishment. The area has been implicated in addiction as well as social behavior.

Social Neuroscience

Humans, like many other animals, are highly social creatures. Accordingly, large parts of our brain are dedicated to processing information about other people. Social neuroscience refers to the study of neural functions that underlie interpersonal behavior, such as reading social cues, understanding social rules, choosing socially-appropriate responses, and understanding oneself and others. The latter process is known as “mentalizing” — making sense of your own thought processes and those of others. The medial PFC, as well as some areas of the lateral PFC, are highly involved in these skills.

Mentalizing underlies some of our most complex and fascinating mental abilities. These include empathy and “theory of mind,” which is understanding the mental states of others and the reasons for their actions. Until recently, research devoted little emphasis to the social and emotional abilities needed for these higher-order mental functions, but now such topics are being avidly studied.

An obvious way that we understand the mental states of others is by observing their actions. This requires the brain to see and recognize others’ movements and facial expressions, and then draw inferences about the feelings and intentions that drive them. Scientists have learned how brain activity drives these processes by scanning people’s brains with fMRI as subjects watch video clips of other people.

Several regions in the medial prefrontal cortex help us make judgments about ourselves and others. In addition, a specific region at the border of temporal and parietal lobes, the temporoparietal junction (TPJ), appears to focus on others and not on the self. The TPJ is also activated when we watch others engage in actions that seem at odds with their intentions or in actions intended to be deceptive.

A popular, though controversial, theory of social cognition centered on the discovery of “mirror neurons.” In the 1990s, scientists identified neurons in the motor cortex of rhesus macaques that fired when the monkeys performed a specific action. They were astonished to find these neurons also fired when the monkeys simply watched another person or monkey perform that same action. The findings prompted speculation that mirror neurons underlie our ability to understand another person’s actions. Additional studies revealed humans also possessed mirror neurons, and in even wider brain networks.

Mirror neurons permeated popular media. Within a decade of their discovery, however, mirror neurons’ role in social cognition was called into question — many scientists argued that there was little direct evidence supporting mirror neurons’ purported roles in theory of mind, mentalizing, and empathy.

Researchers are continuing to investigate mirror neurons, as well as the complexities of the human brain that allow us to understand and empathize with others.
