CHAPTER 2

Senses & Perception

You can think of your sense organs as the brain’s windows on the external world. The world itself has no actual images, sounds, tastes, and smells. Instead, you are surrounded by different types of energy and molecules that must be translated into perceptions or sensations. For this extraordinary transformation to work, your sense organs turn stimuli such as light waves or food molecules into electrical signals through the process of transduction. These electrical messages are then carried through a network of cells and fibers to specialized areas of your brain where they are processed and integrated into a seamless perception of your surroundings.

VISION

Vision is one of your most complicated senses, involving many processes that work simultaneously to let you see what is happening around you. It is no surprise, then, that the visual system involves about 30 percent of the human cerebral cortex, more than any other sense. Vision has been studied intensively, and we now know more about it than about any other sensory system. Knowledge of how light energy is converted into electrical signals comes primarily from studies of fruit flies (Drosophila) and mice. Higher-level visual processing has mostly been studied in monkeys and cats.

In many ways, seeing with your eyes is similar to taking pictures with an old-fashioned camera. Light passes through the cornea and enters the eye through the pupil. The iris regulates how much light enters by changing the size of the pupil. The lens then bends the light so that it focuses on the inner surface of your eyeball, on a sheet of cells called the retina. The rigid cornea does the initial focusing, but the lens can thicken or flatten to bring near or far objects into better focus on the retina. Much like a camera capturing images on film, visual input is mapped directly onto the retina as a two-dimensional reversed image. Objects to the right project images onto the left side of the retina and vice versa; objects above are imaged at the lower part and vice versa. After processing by specialized cells in several layers of the retina, signals travel via the optic nerves to other parts of your brain and undergo further integration and interpretation.

The Three-Layered Retina

The retina is home to three types of neurons (photoreceptors, interneurons, and ganglion cells), which are organized into several layers. These cells communicate extensively with each other before sending information along to the brain. Counterintuitively, the light-sensitive photoreceptors, the rods and cones, are located in the outermost layer of the retina, the layer farthest from the lens. This means that after entering through the cornea and lens, light travels through the ganglion cells and interneurons before it reaches the photoreceptors. Ganglion cells and interneurons do not respond directly to light, but they process and relay information from the photoreceptors; the axons of ganglion cells exit the retina together, forming the optic nerve.

There are approximately 125 million photoreceptors in each human eye, and they turn light into electrical signals. The process of converting one form of energy into another occurs in most sensory systems and is known as transduction. Rods, which make up about 95 percent of photoreceptors in humans, are extremely sensitive, allowing you to see in dim light. Cones, on the other hand, pick up fine detail and color, allowing you to engage in activities that require a great deal of visual acuity. The human eye contains three types of cones, each most sensitive to a different range of wavelengths (roughly red, green, or blue). Because their sensitivities overlap, differing combinations of activity across the three cone types convey information about every color, enabling you to see the familiar color spectrum. In that way, your eyes resemble computer monitors that mix red, green, and blue levels to generate millions of colors.
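The point that color is carried by the pattern of activity across cone types, not by any single cone, can be illustrated with a small numerical sketch. It is a simplified model, not measured data: it assumes Gaussian-shaped sensitivity curves peaking near 420, 530, and 560 nm (close to the commonly cited peaks of human blue, green, and red cones) with a hypothetical shared width of 50 nm.

```python
import math

# Approximate peak sensitivities of the three human cone types, in nanometers.
# Assumption: each curve is modeled as a Gaussian with the same hypothetical width;
# real cone spectra are broader and asymmetric.
CONE_PEAKS_NM = {"blue (S)": 420.0, "green (M)": 530.0, "red (L)": 560.0}
WIDTH_NM = 50.0


def cone_responses(wavelength_nm: float) -> dict:
    """Relative activation (0 to 1) of each cone type for light of a single wavelength."""
    return {
        cone: math.exp(-((wavelength_nm - peak) ** 2) / (2 * WIDTH_NM ** 2))
        for cone, peak in CONE_PEAKS_NM.items()
    }


def color_signature(wavelength_nm: float) -> tuple:
    """Normalize the three activations so that only their ratio (the color code) remains."""
    responses = cone_responses(wavelength_nm)
    total = sum(responses.values())
    return tuple(round(value / total, 3) for value in responses.values())


# A single cone type cannot tell these two wavelengths apart...
print(cone_responses(480)["green (M)"], cone_responses(580)["green (M)"])
# ...but the pattern across all three cone types differs.
print(color_signature(480), color_signature(580))
```

In this sketch the green cone responds identically to 480 nm and 580 nm light, so on its own it cannot signal color; only the comparison across all three cone types separates the two.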

Because the center of the retina contains many more cones than other retinal areas, vision is sharper here than in the periphery. In the very center of the retina is the fovea, a small pitted area where cones are most densely packed. The fovea contains only red and green cones and can resolve very fine details. The area immediately around the fovea, the macula, is critical for reading and driving. In the United States and other developed countries, death or degeneration of photoreceptors in the macula, called macular degeneration, is a leading cause of blindness in people older than 55.

Neurons in each of the three layers of the retina typically receive inputs from many cells in the preceding layer, but the total number of inputs varies widely across the retina. For example, in the macular region where visual acuity is highest, each ganglion cell receives input (via one or more interneurons) from just one or very few cones, allowing you to resolve very fine details. Near the margins of the retina, however, each ganglion cell receives signals from many photoreceptor cells. This convergence of inputs explains why your peripheral vision is less detailed. The portion of visual space providing input to a single ganglion cell is called its receptive field.
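A toy sketch of why convergence costs detail: each model ganglion cell simply averages a fixed, non-overlapping group of cones (an assumption; real pooling is weighted and overlapping). A fine pattern of stripes survives one-to-one wiring but is averaged into uniform gray when four cones feed each cell.

```python
def ganglion_outputs(cone_signals: list, cones_per_cell: int) -> list:
    """Each model ganglion cell averages a non-overlapping group of neighboring cones."""
    return [
        sum(cone_signals[i:i + cones_per_cell]) / cones_per_cell
        for i in range(0, len(cone_signals) - cones_per_cell + 1, cones_per_cell)
    ]


# A fine striped pattern: alternating light (1.0) and dark (0.0), each stripe one cone wide.
stripes = [1.0, 0.0] * 8

central = ganglion_outputs(stripes, cones_per_cell=1)     # little convergence, as near the center
peripheral = ganglion_outputs(stripes, cones_per_cell=4)  # more convergence, as near the margins

print(central)     # the stripes survive: identical to the input
print(peripheral)  # [0.5, 0.5, 0.5, 0.5]: the stripes are averaged away
```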

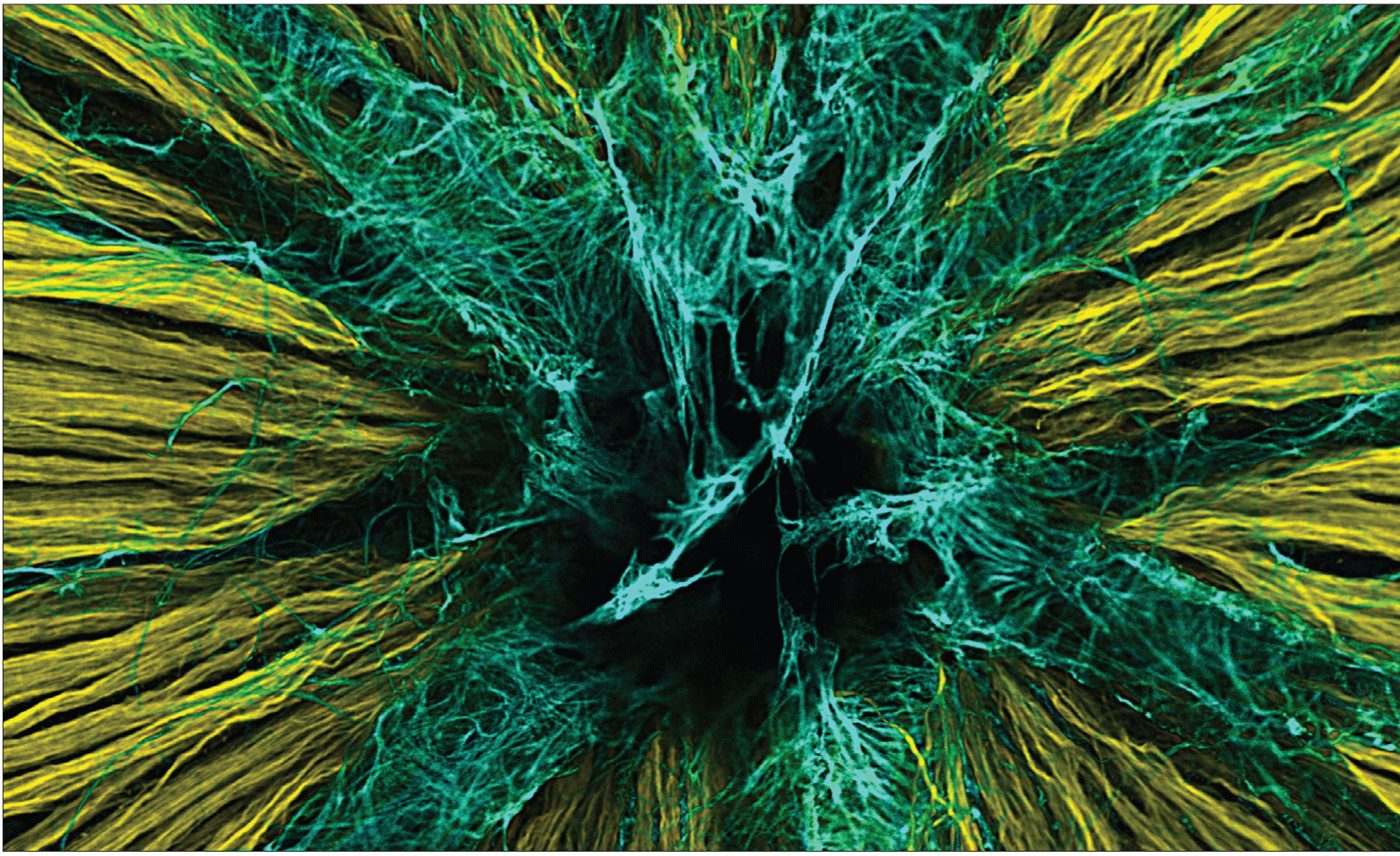

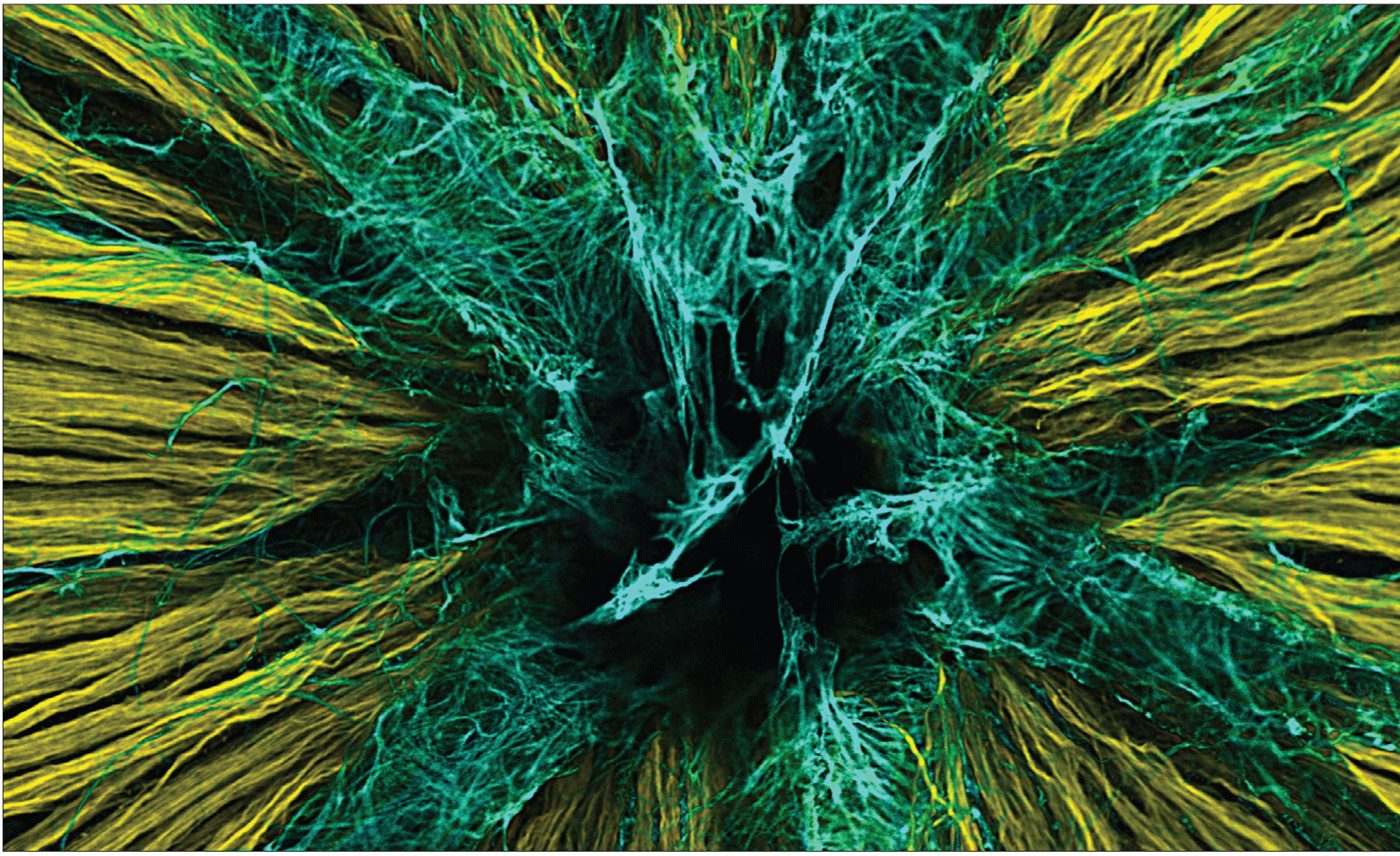

Ward, et al. The Journal of Neuroscience, 2014.

Here, in the back of the eye, is one of the first stops visual information makes on its way to the brain. In this image of a mouse retina, axons of nerve cells are labeled in yellow. They extend through a small opening in the back of the eye — labeled in black — through the optic nerve to higher vision centers. The axons must penetrate another layer of cells known as astrocytes, labeled in blue, that provide nutritional support to the retina.

How Is Visual Information Processed?

Every time you open your eyes, you distinguish shapes, colors, contrasts and the speed and direction of movements. You can easily distinguish your coffee mug from the peanut butter jar in front of you. You can also tell that the tree outside the window stands still and the squirrel is scurrying up the tree (not vice versa). But how is a simple two-dimensional retinal image processed to create such complex imagery?

Visual processing begins with comparing the amounts of light hitting small, adjacent areas on the retina. The receptive fields of ganglion cells “tile” the retina, providing a complete two-dimensional representation (or map) of the visual scene. An ON-center ganglion cell is activated when light hits a tiny region on the retina that corresponds to the center of its receptive field; it is inhibited when light hits the donut-shaped area surrounding the center. (OFF-center ganglion cells respond in the opposite way.) If light strikes the entire receptive field, both the donut and its hole, the ganglion cell responds only weakly. This center-surround antagonism is the first way our visual system maximizes the perception of contrast, which is key to object detection.
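Center-surround antagonism can be sketched with a one-dimensional row of receptors. This is a deliberately minimal model of an ON-center cell, assumed for illustration: one excitatory center receptor and two inhibitory neighbors weighted so that uniform light cancels out. Researchers more often use a smooth difference-of-Gaussians profile, but the principle is the same.

```python
def on_center_response(light: list, i: int) -> float:
    """Model ON-center ganglion cell centered on receptor i.

    Center: receptor i (excitatory). Surround: its two neighbors, each weighted 0.5
    (inhibitory), so the surround exactly cancels the center under uniform light.
    Negative values stand for activity below the cell's resting rate.
    """
    center = light[i]
    surround = 0.5 * (light[i - 1] + light[i + 1])
    return center - surround


uniform = [1.0] * 7
spot = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]

print(on_center_response(uniform, 3))  # 0.0: light fills both center and surround
print(on_center_response(spot, 3))     # 1.0: light falls on the center only
print([on_center_response(edge, i) for i in range(1, 6)])  # [0.0, -0.5, 0.5, 0.0, 0.0]
```

Only the model cells sitting at the light-dark border respond to the edge; cells in the evenly lit or evenly dark regions stay at zero, which is how the contrast is emphasized.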

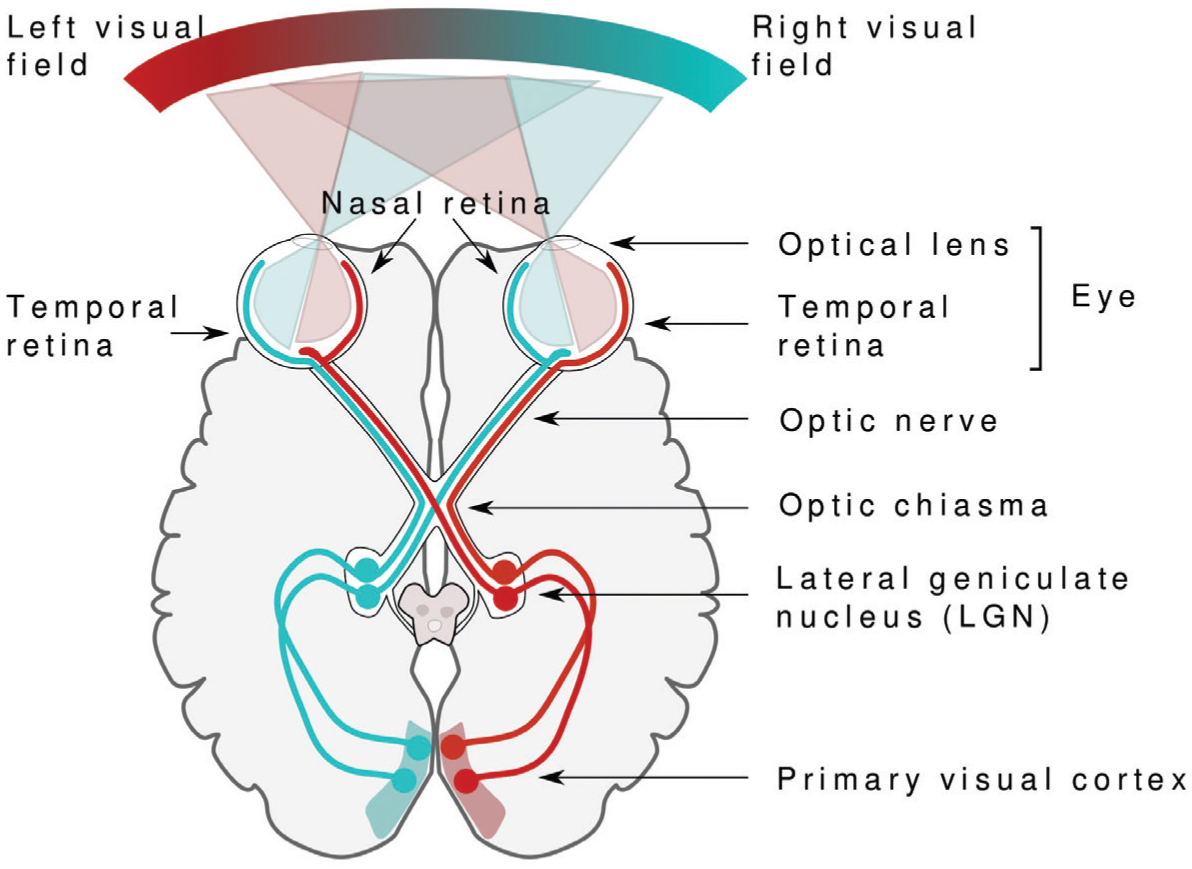

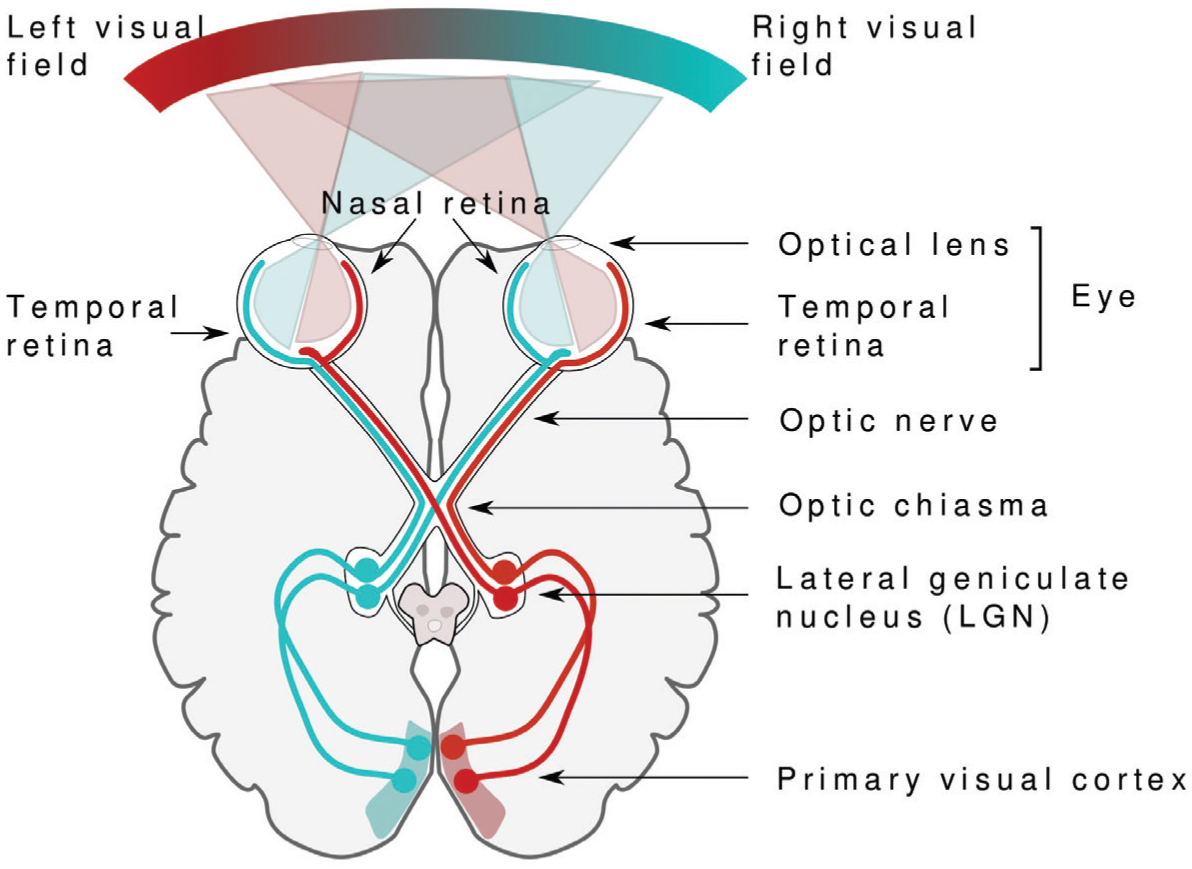

Neural activity in the axons of ganglion cells is transmitted via the optic nerves, which exit the back of each eye and travel toward the back of the brain. Because there are no photoreceptors at this site, the exit point of the optic nerve creates a small “blind spot” in each eye, which our brains conveniently “fill in” using information from the other eye. On their way to the brain, nerve fibers from both eyes first converge at a crossover junction called the optic chiasm. Fibers carrying information from the left side of the retinas of both eyes continue together on the left side of the brain; information from the right side of both retinas proceeds on the right side of the brain. Visual information is then relayed through the lateral geniculate nucleus, a region of the thalamus, and on to the primary visual cortex at the rear of the brain.

Miquel Perelló Nieto.

Vision begins with light. The light bouncing off an object passes through the lens and hits the retina at the back of the eye. Receptors in the retina transform light into electrical signals that carry information to the vision processing centers in the brain.
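The crossover at the optic chiasm can be traced with a short routing function. It is a sketch, not an anatomical model, and it uses two standard anatomical terms the text does not: the nasal half of each retina (nearer the nose), whose fibers cross at the chiasm, and the temporal half (nearer the temple), whose fibers do not. Running it confirms that each visual field ends up in the opposite hemisphere no matter which eye sees it.

```python
OPPOSITE = {"left": "right", "right": "left"}


def retinal_half(visual_field: str) -> str:
    """The lens reverses the image, so each visual field falls on the opposite half of each retina."""
    return OPPOSITE[visual_field]


def is_nasal(eye: str, half: str) -> bool:
    """The nasal half of a retina is nearer the nose: the right half of the left eye, and vice versa."""
    return half == OPPOSITE[eye]


def target_hemisphere(eye: str, visual_field: str) -> str:
    """Brain hemisphere reached by a signal from one visual field, as seen by one eye."""
    half = retinal_half(visual_field)
    # Fibers from the nasal half cross at the optic chiasm; fibers from the
    # temporal (outer) half stay on the same side as the eye they came from.
    return OPPOSITE[eye] if is_nasal(eye, half) else eye


for field in ("left", "right"):
    for eye in ("left", "right"):
        print(f"{field} visual field, {eye} eye -> {target_hemisphere(eye, field)} hemisphere")
```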

Visual Cortex: Layers, Angles, and Streams

The primary visual cortex, a thin sheet of neural tissue no larger than a half-dollar, is located in the occipital lobe at the back of your brain. Like the retina, this region consists of many layers with densely packed cells. The middle layer, which receives messages from the thalamus, contains cells with receptive fields similar to those in the retina and preserves the retina’s visual map. Cells above and below the middle layer have more complex receptive fields and respond to stimuli shaped like bars or edges with particular orientations. For example, specific cells can respond to edges at a certain angle or moving in a particular direction. From these layers of cells, new processing streams pass the information along to other parts of the visual cortex. As visual information from the primary visual cortex is combined in other areas, receptive fields become increasingly complex and selective. Some neurons at higher levels of processing, for example, respond only to specific objects and faces.
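Orientation selectivity can be illustrated by treating a model cortical cell as a weighted sum over a small patch of the visual field. The 3 x 3 weight template below is a hand-made assumption (an excitatory stripe flanked by inhibition), not a measured receptive field; it responds strongly to a bar at its preferred angle and not at all to a bar rotated 90 degrees or to uniform light.

```python
# Hypothetical template for a model "vertical bar" cell: excitatory middle column,
# inhibitory flanks. The weights sum to zero, so uniform light produces no response.
VERTICAL_CELL = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]
# Transposing this symmetric template rotates it by 90 degrees: a "horizontal bar" cell.
HORIZONTAL_CELL = [list(row) for row in zip(*VERTICAL_CELL)]


def response(weights: list, patch: list) -> int:
    """Weighted sum of the light falling on a 3 x 3 patch of the visual field."""
    return sum(w * p for w_row, p_row in zip(weights, patch) for w, p in zip(w_row, p_row))


vertical_bar = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
horizontal_bar = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
uniform_light = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

print(response(VERTICAL_CELL, vertical_bar))      # 6: preferred orientation
print(response(VERTICAL_CELL, horizontal_bar))    # 0: wrong orientation
print(response(VERTICAL_CELL, uniform_light))     # 0: no bar or edge at all
print(response(HORIZONTAL_CELL, horizontal_bar))  # 6: the rotated cell prefers horizontal bars
```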

Studies in monkeys suggest that visual signals are fed into several parallel but interacting processing streams. Two of these are the dorsal stream, which heads up toward the parietal lobe, and the ventral stream, which heads down to the temporal lobe. Traditionally, these streams were believed to carry out separate kinds of processing: the dorsal stream handling unconscious vision that guides behavior, and the ventral stream handling conscious visual experience. If you see a dog running out into the street, the ventral or “What” stream would integrate information about the dog’s shape and color with memories and experiences that let you recognize the dog as your neighbor’s. The dorsal or “Where” stream would combine various spatial relationships, motion, and timing to create an action plan without the need for conscious thought; you might shout “Stop!” without thinking. Ongoing research now questions this strict division of labor and suggests that crosstalk between the streams may be what creates a conscious experience. Clearly, in recognizing an image, the brain extracts information at several stages, compares it with past experiences, and passes it to higher levels for processing.

Eyes Come in Pairs

Seeing with two eyes, called binocular vision, allows you to perceive depth or three dimensions, because each eye sees an object from a slightly different angle. This only works if the eyes’ visual fields overlap and if both eyes are equally active and properly aligned. A person with crossed eyes, a condition called strabismus, misses out on much depth perception. Information from the perspective of each eye is preserved all the way to the primary visual cortex where it is processed further. Two eyes also allow a much larger visual field to be mapped onto the primary visual cortex. Because some of the nerve fibers exiting each eye cross over at the optic chiasm, signals from the left visual field end up on the right side of the brain and vice versa, no matter which eye the information comes from. A similar arrangement applies to movement and touch. Each half of the cerebrum is responsible for processing information from the opposite side of the body.
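The geometry behind binocular depth perception can be sketched with simple trigonometry. Assuming the eyes are about 6.3 cm apart (a typical adult value, used here as an assumption) and both fixate a point straight ahead, the angle between the two lines of sight shrinks as the point moves away. The difference in that angle between two objects is the kind of binocular disparity the brain can use as a depth cue.

```python
import math

INTEROCULAR_M = 0.063  # assumed distance between the two eyes, in meters


def vergence_angle_deg(distance_m: float) -> float:
    """Angle between the two eyes' lines of sight when both fixate a point straight ahead."""
    return math.degrees(2 * math.atan((INTEROCULAR_M / 2) / distance_m))


def disparity_deg(near_m: float, far_m: float) -> float:
    """Difference in viewing angle between two points at different depths."""
    return vergence_angle_deg(near_m) - vergence_angle_deg(far_m)


print(f"{disparity_deg(0.5, 0.6):.3f} degrees for objects at 0.5 m and 0.6 m")
print(f"{disparity_deg(10.0, 10.1):.4f} degrees for objects at 10 m and 10.1 m")
```

In this model, a 10 cm difference in depth at half a meter changes the angle by about 1.2 degrees, while the same difference at 10 m changes it by only a few thousandths of a degree, which is one reason binocular depth cues are most useful for nearby objects.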

Treating Visual Disorders

Many research studies using animals have provided insights into treatment of diseases that affect eyesight. Research with cats and monkeys has helped us find better therapies for strabismus. Children with strabismus initially have good vision in each eye, but because they cannot fuse the images coming from both eyes, they start to favor one eye and often lose vision in the other. Vision can be restored in such cases, but only if the child is treated at a young age; beyond the age of 8 or so, the blindness becomes permanent. Until a few decades ago, ophthalmologists prescribed exercises or an eye patch and waited until children were 4 years old before operating to align the eyes. Now strabismus is corrected well before age 4, when normal vision can still be restored.

Loss of function or death of photoreceptors appears to lie at the heart of various disorders that cause blindness. Unfortunately, many are difficult to treat. Extensive genetic studies and the use of model organisms have identified a variety of genetic defects that cause people to go blind, making it possible to design gene or stem cell therapies that could restore photoreceptors. Researchers are working on potential treatments for genetic blindness, and gene therapies have already enabled some patients with loss of central vision (macular degeneration) or other forms of blindness to see better. Work is also underway to send electrical signals directly to the brain via ganglion cells rather than attempting to restore lost photoreceptors, an approach very similar to the use of cochlear implants to treat deafness.

HEARING

Hearing is one of your most important senses, alerting you to an approaching car and telling you where it’s coming from long before it comes into sight. Hearing is also central to social interactions. It allows you to communicate with others by processing and interpreting complex messages in the form of speech sounds. Like the visual system, your hearing (auditory) system picks up several qualities of the signals it detects, such as a sound’s pitch, loudness, duration, and location. Your auditory system analyzes complex sounds, breaking them into separate components or frequencies; as a result, you can follow particular voices in a conversation or instruments as you listen to music.
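Breaking a complex sound into its component frequencies is the same idea behind Fourier analysis. The sketch below builds a sound from two pure tones and then recovers how much of each frequency it contains using single bins of a discrete Fourier transform. The sampling rate and tones are arbitrary choices; the ear does not compute a literal Fourier transform, but the cochlea performs a comparable frequency analysis mechanically.

```python
import cmath
import math

SAMPLE_RATE_HZ = 8000  # assumed sampling rate for this digital example


def mixed_tones(freqs_hz: tuple, n_samples: int) -> list:
    """A 'complex' sound made by adding pure tones of equal loudness."""
    return [
        sum(math.sin(2 * math.pi * f * n / SAMPLE_RATE_HZ) for f in freqs_hz)
        for n in range(n_samples)
    ]


def component_amplitude(signal: list, freq_hz: float) -> float:
    """Amplitude of one frequency in the signal, from a single discrete Fourier transform bin."""
    total = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / SAMPLE_RATE_HZ)
        for n, x in enumerate(signal)
    )
    return 2 * abs(total) / len(signal)


sound = mixed_tones((220.0, 440.0), n_samples=800)  # 0.1 s of sound
for probe in (220.0, 330.0, 440.0):
    print(probe, "Hz:", round(component_amplitude(sound, probe), 3))
```

Probing at 220 and 440 Hz returns an amplitude of about 1 for each tone, while 330 Hz, which is absent from the mixture, returns about 0.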

Can You Hear Me Now?

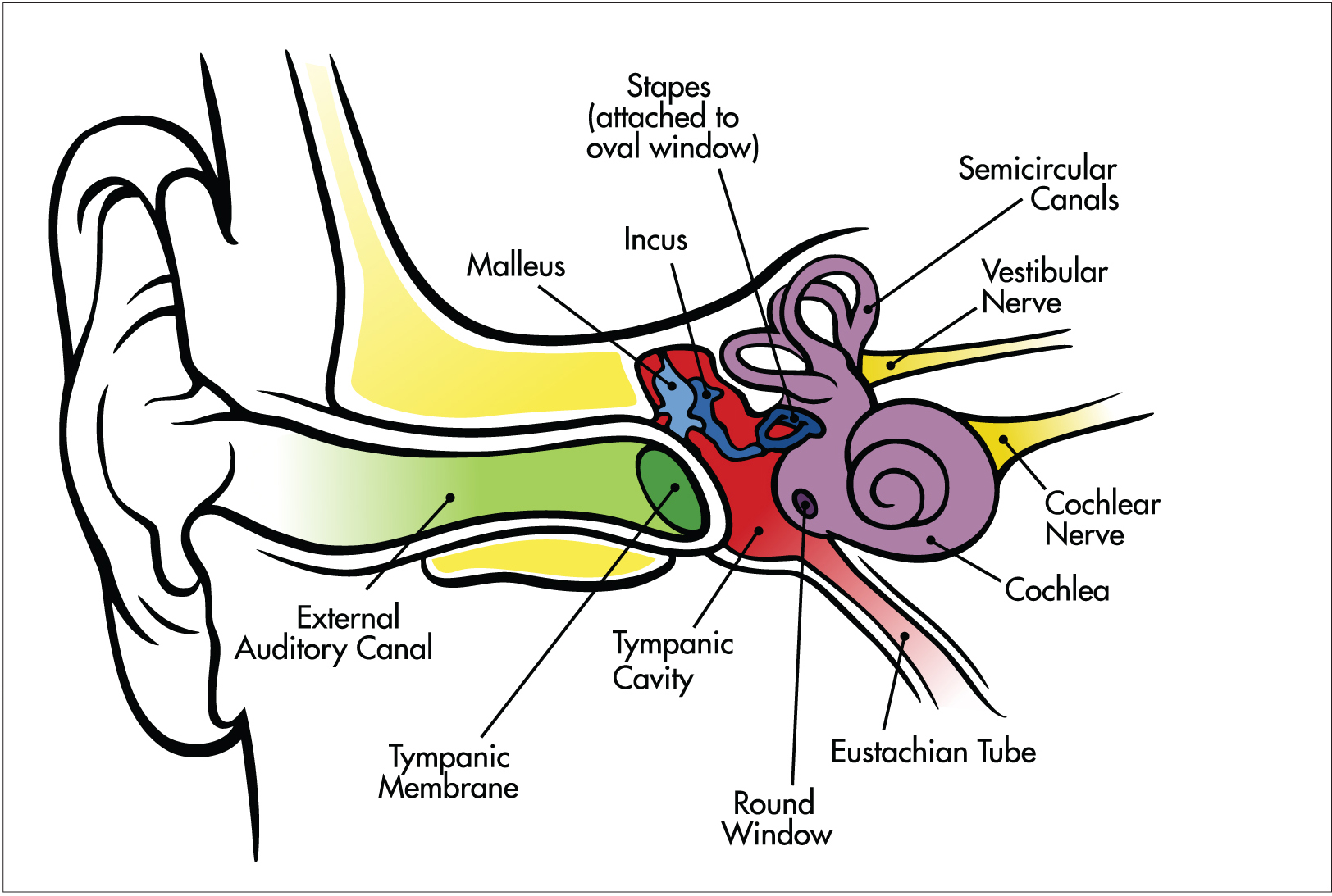

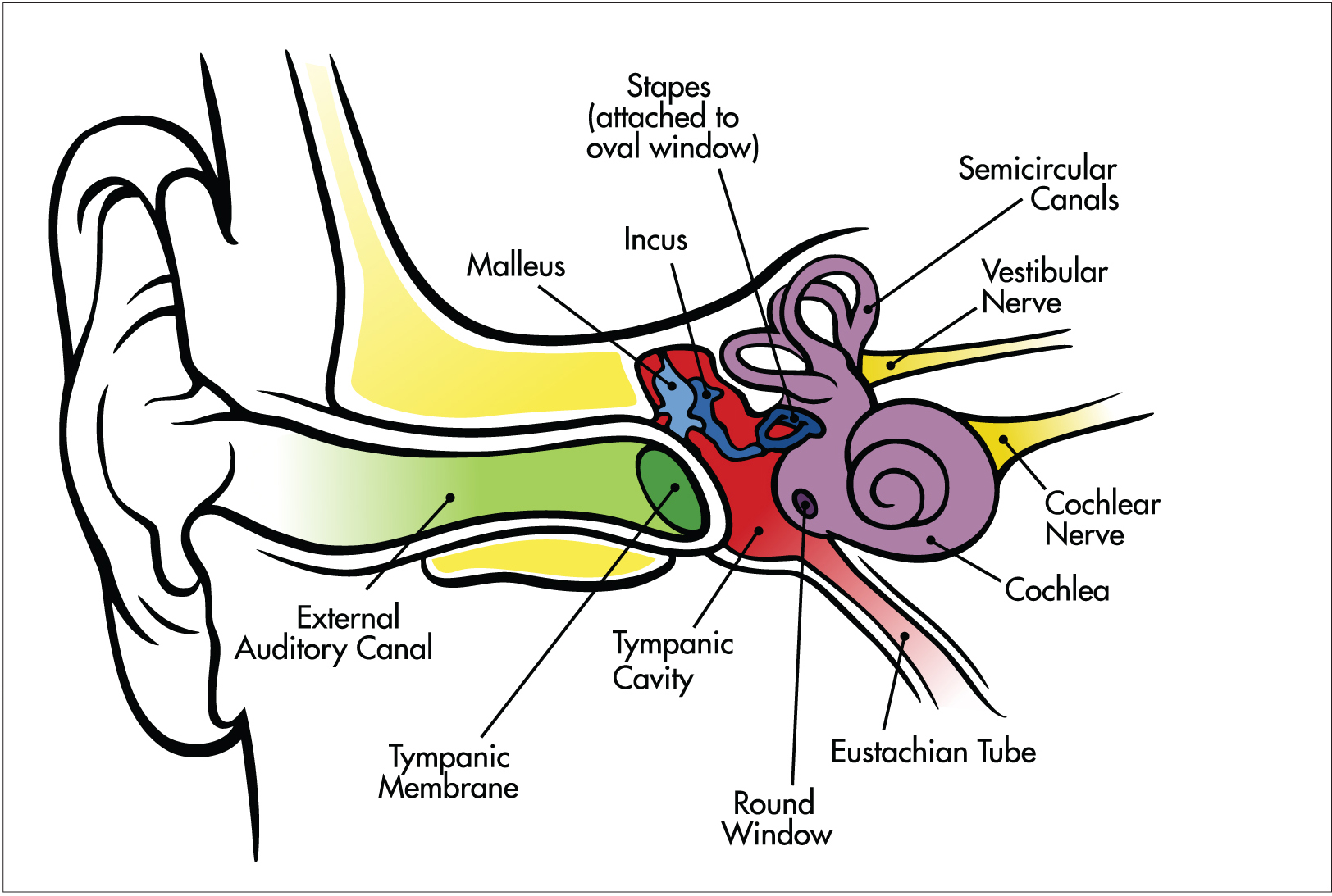

Whether it’s the dreaded alarm in the morning, the ringtone on your cell phone, or your favorite jogging music, hearing involves a series of steps that convert sound waves in the air into electrical signals that are carried to the brain by nerve cells. Sound in the form of air pressure waves reaches the pinnae of your ears, where the waves are funneled into each ear canal to reach the eardrum (tympanic membrane). The eardrum vibrates in response to these changes in air pressure, sending these vibrations to three tiny, sound-amplifying bones in the middle ear: the malleus (hammer), incus (anvil), and stapes (stirrup). The last bone in the chain (the stapes) acts like a tiny piston, pushing on the oval window, a membrane that separates the air-filled middle ear from the fluid-filled, snail-shell-shaped cochlea of the inner ear. The oval window converts the mechanical vibrations of the stapes into pressure waves in the fluid of the cochlea, where they are transduced into electrical signals by specialized receptor cells (hair cells).
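The middle ear’s amplification can be worked out with simple arithmetic. Because pressure is force divided by area, funneling the force collected by the relatively large eardrum onto the much smaller oval window raises the pressure, and the lever action of the ossicles adds a little more. The areas and lever ratio below are approximate values often quoted in textbooks and should be read as assumptions.

```python
import math

# Approximate textbook values, treated here as assumptions:
EARDRUM_AREA_MM2 = 55.0     # effective vibrating area of the eardrum
OVAL_WINDOW_AREA_MM2 = 3.2  # area of the stapes footplate in the oval window
LEVER_RATIO = 1.3           # mechanical advantage of the ossicle chain


def middle_ear_pressure_gain() -> float:
    """Pressure = force / area, so concentrating force onto a smaller area raises pressure."""
    return (EARDRUM_AREA_MM2 / OVAL_WINDOW_AREA_MM2) * LEVER_RATIO


gain = middle_ear_pressure_gain()
print(f"pressure gain ~ {gain:.1f}x, or about {20 * math.log10(gain):.0f} dB")
```

With these numbers the pressure gain comes out a little over 20-fold, or roughly 27 dB, which helps make up for the energy that would otherwise be lost when sound passes from air into the cochlear fluid.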

From Pressure Wave to Electrical Signal

An elastic membrane, called the basilar membrane, runs along the inside of the cochlea like a winding ramp, spiraling from the outer coil, near the oval window, to the innermost coil. The basilar membrane is “tuned” along its length to different frequencies (pitches). When fluid inside the cochlea ripples, the membrane moves, vibrating to higher-pitched sounds (like the screech of audio feedback) near the oval window and to lower-pitched sounds (like a bass drum) near the innermost coil, at the center of the spiral.
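The tuning along the basilar membrane is often summarized by Greenwood’s frequency-position function. The constants below (A = 165.4, a = 2.1, k = 0.88) are the values commonly used for the human cochlea, with position measured as a fraction of the membrane’s length from the innermost coil; treat the sketch as an approximation rather than an exact map for any individual ear.

```python
import math

# Greenwood's frequency-position function with constants commonly used for the human cochlea.
# x is the relative position along the basilar membrane, from the innermost coil
# (apex, x = 0.0) to the end near the oval window (base, x = 1.0).
A_HZ = 165.4
ALPHA = 2.1
K = 0.88


def best_frequency_hz(x: float) -> float:
    """Frequency (Hz) that makes the membrane vibrate most at relative position x."""
    return A_HZ * (10 ** (ALPHA * x) - K)


def position_for(frequency_hz: float) -> float:
    """Inverse map: where along the membrane a given frequency peaks."""
    return math.log10(frequency_hz / A_HZ + K) / ALPHA


for x in (0.0, 0.5, 1.0):
    print(f"x = {x:.1f}: about {best_frequency_hz(x):,.0f} Hz")
print(f"1 kHz peaks at x = {position_for(1000):.2f}")
```

Under this model the innermost coil responds best to roughly 20 Hz and the end near the oval window to roughly 20 kHz, matching the usual range of human hearing.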

Rows of small sensory hair cells are located on top of the vibrating basilar membrane. When the membrane moves up and down, microscopic hair-like stereocilia extending from the hair cells bend against an overlying structure called the tectorial membrane. This bending opens small channels in the stereocilia that allow ions in the surrounding fluid to rush in, converting the physical movement into an electrochemical signal. Hair cells stimulated in this way then excite the auditory nerve, which sends its electrical signals on to the brainstem.
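The link between bending and channel opening is often described with a sigmoid (Boltzmann) curve: the more the hair bundle is deflected toward its tallest stereocilia, the larger the fraction of channels that open. The curve’s shape is standard, but the half-open point and slope below are hypothetical numbers chosen for illustration.

```python
import math

# Hypothetical parameters, chosen for illustration only:
HALF_OPEN_NM = 20.0  # bundle deflection at which half of the channels are open
SLOPE_NM = 10.0      # how steeply opening rises with deflection


def open_fraction(deflection_nm: float) -> float:
    """Fraction of transduction channels open for a given hair-bundle deflection.

    Positive values bend the stereocilia toward the tallest ones (opening channels);
    negative values bend them the other way (closing channels).
    """
    return 1.0 / (1.0 + math.exp(-(deflection_nm - HALF_OPEN_NM) / SLOPE_NM))


for d in (-50, 0, 20, 50):
    print(f"{d:+} nm -> {open_fraction(d):.2f} of channels open")
```

Because a small fraction of channels is already open at rest in this model, bending in one direction increases the signal and bending in the other direction decreases it.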

The next stop for sound processing is the thalamus, the brain’s relay station for incoming sensory information, which then sends the information into the auditory part of the cerebral cortex. Several thousand hair cells are positioned along the length of the basilar membrane. Each hair cell responds most strongly to just a narrow range of sound frequencies, depending on how far along the cochlea it is located. Thus, each nerve fiber connecting with the hair cells is tuned to very specific frequencies and carries this information into the brain.

Making Sense of Sound

On the way to the cortex, the brainstem and thalamus use the information from both ears to compute a sound’s direction and location. The frequency map of the basilar membrane is maintained throughout, even in the primary auditory cortex in the temporal lobe, where different auditory neurons respond to different frequencies. Some cortical neurons, however, respond to sound qualities such as intensity, duration, or a change in frequency. Other neurons are selective for complex sounds, while still others specialize in various combinations of tones. At higher levels, beyond the primary auditory cortex, neurons are able to process harmony, rhythm, and melody, and combine the types of auditory information into a voice or instrument that you can recognize.
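One cue the brainstem uses to compute direction is the tiny difference in when a sound reaches each ear. A simple geometric sketch is below; it assumes the ears are 20 cm apart in a straight line and ignores sound bending around the head, which more realistic models (such as Woodworth’s formula) include.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # in air at about 20 degrees C
EAR_SEPARATION_M = 0.20         # assumed straight-line distance between the ears


def interaural_time_difference_us(azimuth_deg: float) -> float:
    """Extra travel time to the far ear, in microseconds, for a distant source.

    0 degrees = straight ahead, 90 = directly to one side. Ignores the head's curvature.
    """
    path_difference_m = EAR_SEPARATION_M * math.sin(math.radians(azimuth_deg))
    return 1e6 * path_difference_m / SPEED_OF_SOUND_M_PER_S


def azimuth_from_itd_deg(itd_us: float) -> float:
    """Invert the same model: estimate direction from a measured timing difference."""
    ratio = itd_us * 1e-6 * SPEED_OF_SOUND_M_PER_S / EAR_SEPARATION_M
    return math.degrees(math.asin(max(-1.0, min(1.0, ratio))))


for angle in (0, 30, 90):
    print(angle, "degrees ->", round(interaural_time_difference_us(angle)), "microseconds")
```

Even a sound directly to one side arrives at the far ear less than a millisecond later in this model, so the circuits comparing the two ears must be sensitive to differences of a few tens of microseconds to resolve small changes in direction.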

Although sound is processed on both sides of the brain, the left side is typically responsible for understanding and producing speech. Someone whose left auditory cortex is damaged (particularly a region called Wernicke’s area), as by a stroke, can still hear a person speak but no longer understands what is being said.

Treating Hearing Loss

Loss of hair cells is responsible for the majority of cases of hearing loss. Unfortunately, once they die, human hair cells don’t regrow. Current research is therefore focusing on how inner ear structures like hair cells develop and function, exploring new avenues for treatment that could eventually regenerate or replace damaged hair cells.

Chittka, et al. PLoS Biology, 2005.

Sound waves, vibrations in the air caused by the sound’s source, are picked up by the outer ear and funneled down the auditory canal to the eardrum. There, the malleus (hammer) transfers vibrations to the incus (anvil) and then on to the stapes (stirrup). Hair cells in the cochlea convert the information in these vibrations to electrical signals, which are sent to the brain via the cochlear nerve.

TASTE AND SMELL

The senses of taste (gustation) and smell (olfaction) are closely linked and help you navigate the chemical world. Just as sound is the perception of air pressure waves and sight is the perception of light, smell and taste are your perceptions of tiny molecules in the air and in your food. Both of these senses contribute to how food tastes, and both are important to survival, because they enable people to detect hazardous substances they might inhale or ingest. The cells processing taste and smell are exposed to the outside environment, leaving them vulnerable to damage. Because of this, taste receptor cells regularly regenerate, as do olfactory receptor neurons. In fact, olfactory neurons are the only sensory neurons that are continually replaced throughout our lives.

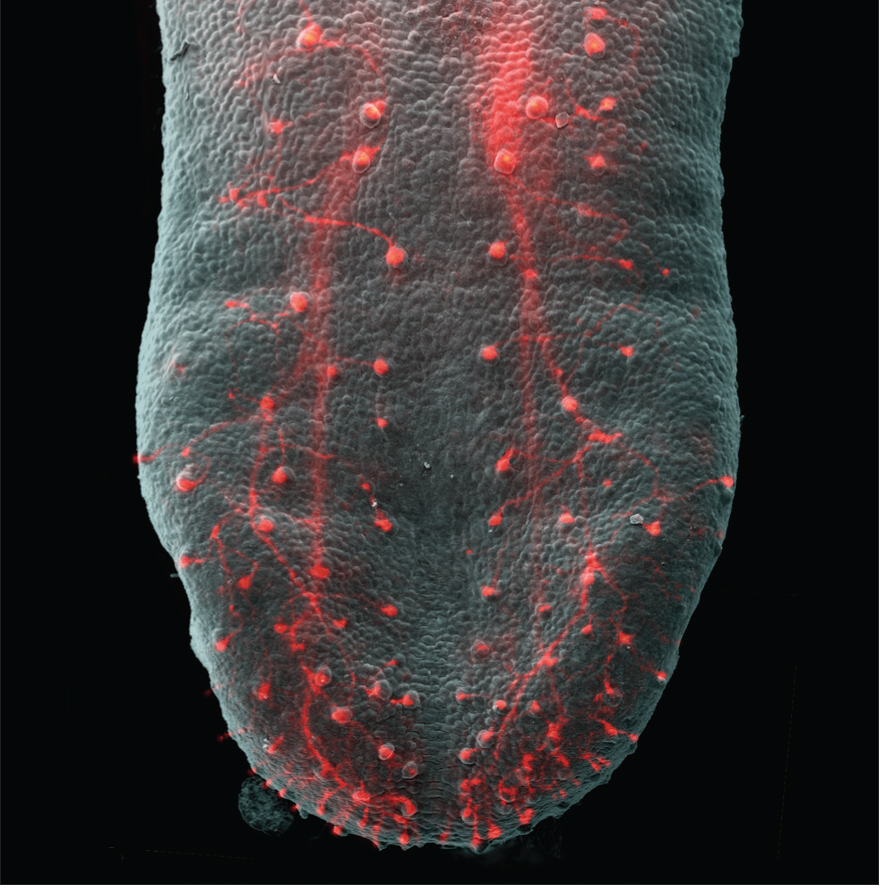

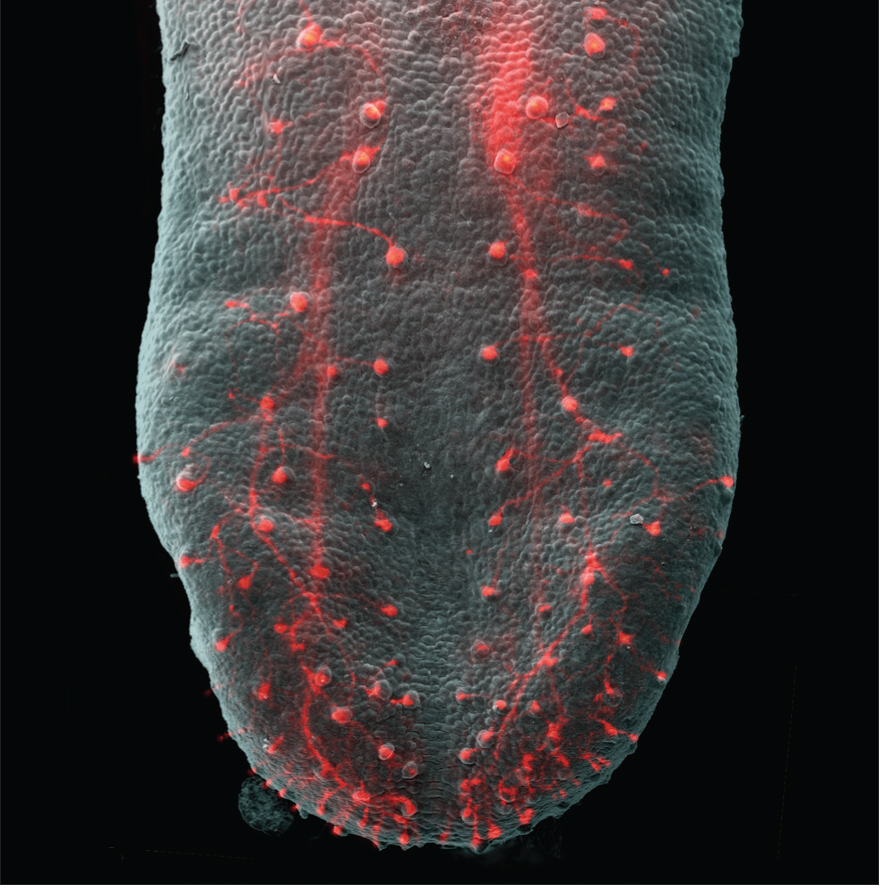

Ma, et al. The Journal of Neuroscience, 2009.

Your tongue’s taste buds contain receptor cells that transform information about tastes and send it to the brain to be processed into your favorite flavors. In this image of a mouse tongue, the axons that connect to these receptors are highlighted in red.

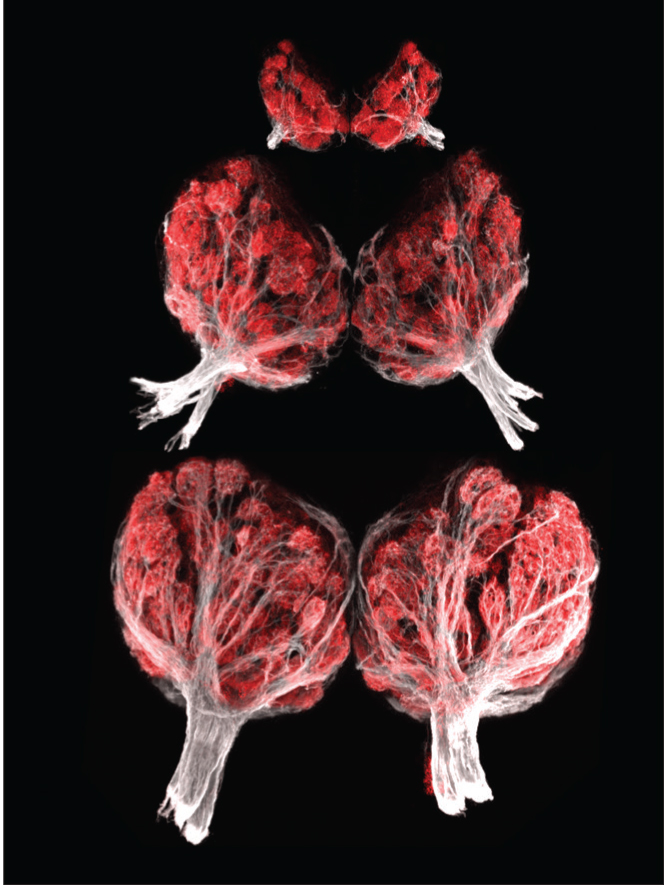

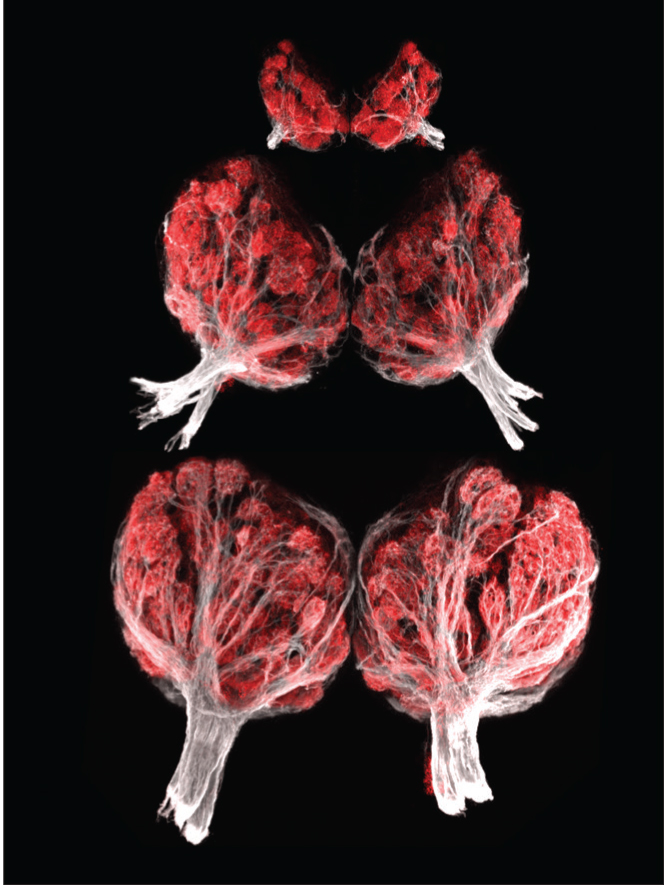

Braubach, et al. The Journal of Neuroscience, 2013.

The olfactory bulb is a structure in the forebrain responsible for processing smell information. This series of images shows the olfactory bulbs from a zebrafish at three stages of development.

From Molecules to Taste

Our ability to taste foods depends on the molecules set free when we chew or drink. These molecules are detected by taste (or gustatory) cells within taste buds located on the tongue and along the roof and back of the mouth. We have between 5,000 and 10,000 taste buds but start to lose them around age 50. Each taste bud consists of 50 to 100 sensory cells that are receptive to one of at least five basic taste qualities: sweet, sour, salty, bitter, and umami (Japanese for “savory”). Contrary to common belief, all tastes are detected across the tongue and are not limited to specific regions. When taste receptor cells are stimulated, they send signals through three cranial nerves (the facial, glossopharyngeal, and vagus nerves) to taste regions in the brainstem. The impulses are then routed through the thalamus to the gustatory cortex in the frontal lobe and insula, where specific taste perceptions are identified.

From Molecules to Smell

Odors enter the nose on air currents and bind to specialized olfactory cells on a small patch of mucous membrane high inside the nasal cavity. Axons of these sensory neurons enter the two olfactory bulbs (one for each nostril) after crossing through tiny holes in the skull. From there, the information travels to the olfactory cortex. Smell is the only sensory system that sends sensory information directly to the cerebral cortex without first passing through the thalamus.

We have around 1,000 different types of olfactory cells, but can identify about 20 times as many smells. The tips of olfactory cells are equipped with several hair-like cilia that are receptive to a number of different odor molecules, and many cells respond to the same molecules. A specific smell will therefore stimulate a unique combination of olfactory cells, creating a distinct activity pattern. This “signature” pattern of activity is then transmitted to the olfactory bulb and on to the primary olfactory cortex located on the anterior surface of the temporal lobe. Olfactory information then passes to nearby brain areas, where odor and taste information are mixed, creating the perception of flavor. Recent research suggests that people can identify odors as quickly as 110 milliseconds after their first sniff. Interestingly, the size of the olfactory bulbs and the way their neurons are organized can change over time. As mentioned above, the olfactory bulbs in rodents and primates (including humans) are among the few brain regions able to generate new neurons (neurogenesis) throughout life.
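The combinatorial code described above can be sketched with sets. The odors and receptor numbers are invented for illustration, and real receptor responses are graded rather than on or off. The point is that a shared receptor (type 4 here) does not cause confusion, because identification uses the whole pattern, and that the number of possible patterns grows enormously with the number of receptor types.

```python
from math import comb

# Hypothetical odors, each activating a set of numbered receptor types.
ODOR_PATTERNS = {
    "banana": frozenset({1, 4, 7}),
    "rose": frozenset({2, 4, 9}),
    "coffee": frozenset({1, 3, 5, 8}),
}


def overlap(a: frozenset, b: frozenset) -> float:
    """Jaccard similarity: shared active receptors divided by all active receptors."""
    return len(a & b) / len(a | b)


def identify(active: frozenset) -> str:
    """Return the known odor whose stored pattern best matches the observed one."""
    return max(ODOR_PATTERNS, key=lambda odor: overlap(ODOR_PATTERNS[odor], active))


# Receptor 4 responds to both banana and rose, yet the full patterns stay distinct.
print(identify(frozenset({1, 4, 7, 9})))  # banana: best match despite the extra receptor 9

# Even if each odor activated only 3 of 1,000 receptor types, there would be
# 166,167,000 possible patterns.
print(comb(1000, 3))
```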

Combining Taste and Smell

Taste and smell are separate senses with their own receptor organs. Yet we notice their close relationship when our nose is stuffed up by a cold and everything we eat tastes bland. It seems as if our sense of taste no longer works, but the actual problem is that we detect only the taste, not taste and smell combined. The sense of taste itself is rather crude, distinguishing only five basic taste qualities, but our sense of smell adds great complexity to the flavors we perceive. Human studies have shown that taste perceptions are particularly enhanced when people are exposed to matching combinations of familiar tastes and smells. For example, sugar tastes sweeter when combined with the smell of strawberries than when paired with the smell of peanut butter or with no odor at all. Taste and smell information appear to converge in several central regions of the brain. There are also neurons in the inferior frontal lobe that respond selectively to specific taste and smell combinations.

Some of our sensitivity to taste and smell is lost as we age, most likely because damaged receptors and sensory neurons are no longer replaced by new ones. Current research is getting closer to understanding how stem cells give rise to the neurons that mediate smell or taste. With this knowledge, stem cell therapies might one day be used to restore taste or smell to those who have lost it.

TOUCH AND PAIN

The somatosensory system is responsible for all the touch sensations we feel. These can include light touch, pressure, vibration, temperature, texture, itch, and pain. We perceive these sensations with various types of touch receptors whose nerve endings are located in different layers of our skin, the body’s main sense organ for touch. In hairy skin areas, some particularly sensitive nerve cell endings wrap around the bases of hairs, responding to even the slightest hair movement.

Signals from touch receptors travel along sensory nerve fibers that connect to neurons in the spinal cord. From there, the signals move upward to the thalamus and on to the somatosensory cortex, where they are translated into a touch perception. Some touch information travels quickly along myelinated nerve fibers with thick axons (A-beta fibers), but other information is transmitted more slowly along thin, unmyelinated axons (C fibers).
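The practical effect of the difference between A-beta and C fibers is easy to estimate, since travel time is distance divided by conduction speed. The speeds below (50 m/s and 1 m/s) are representative assumptions picked from within commonly quoted ranges, and the 1 m path is a round number chosen for simple arithmetic.

```python
# Representative conduction velocities in meters per second. These are assumptions
# picked from within commonly quoted ranges, not precise measurements.
CONDUCTION_VELOCITY_M_PER_S = {
    "A-beta": 50.0,  # thick, myelinated axon
    "C": 1.0,        # thin, unmyelinated axon
}


def travel_time_ms(distance_m: float, fiber: str) -> float:
    """Time for a signal to cover distance_m along one fiber type, in milliseconds."""
    return 1000.0 * distance_m / CONDUCTION_VELOCITY_M_PER_S[fiber]


DISTANCE_M = 1.0  # assumed round-number path length
for fiber in CONDUCTION_VELOCITY_M_PER_S:
    print(f"{fiber} fiber: about {travel_time_ms(DISTANCE_M, fiber):.0f} ms")
```

On these assumptions, a signal on a fast A-beta fiber arrives in about 20 ms, while one on a slow C fiber takes about a full second.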

Cortical Maps and Sensitivity to Touch

Somatosensory information from all parts of your body is spread onto the cortex in the form of a topographic map that curls around the brain like headphones. Very sensitive body areas like lips and fingertips stimulate much larger regions of the cortex than less sensitive parts of the body. The sensitivity of different body regions to tactile and painful stimuli depends largely on the number of receptors per unit area and the distance between them. In contrast to your lips and hands, which are the most sensitive to touch, touch receptors on your back are few and far apart, making your back much less sensitive.

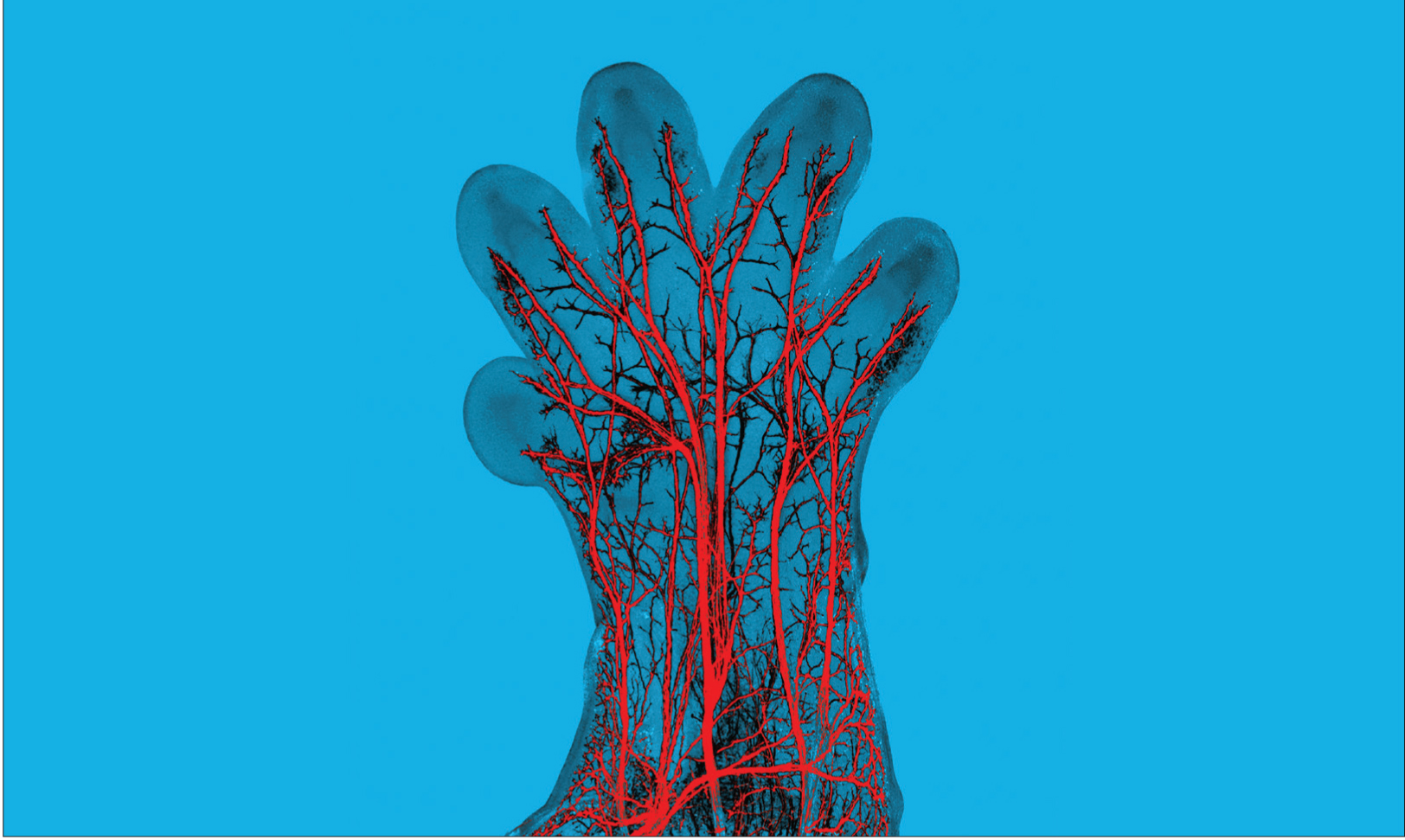

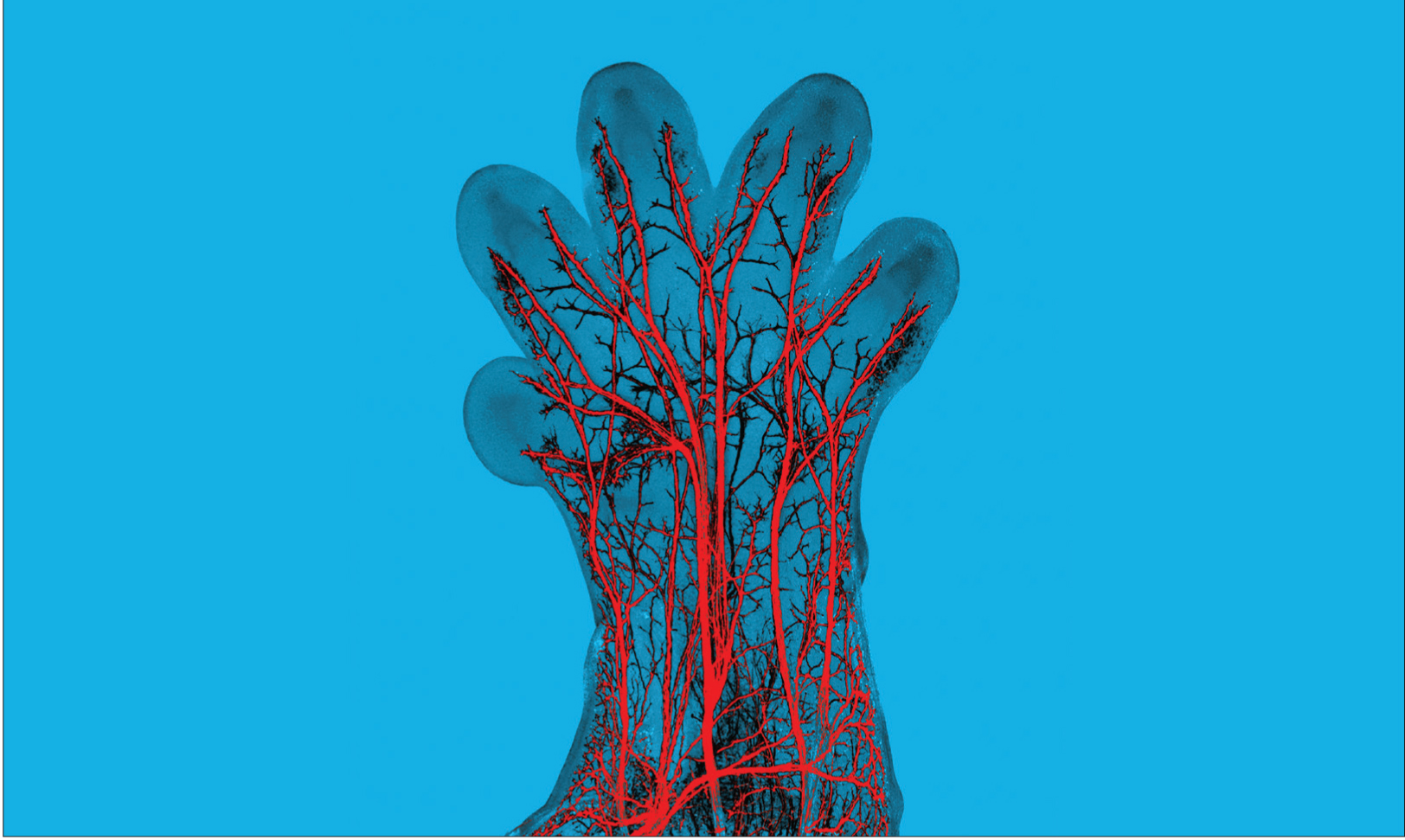

Hadjab, et al. The Journal of Neuroscience, 2013.

In this image, sensory nerve fibers, labeled in red, can be seen in the paw of a developing mouse embryo. These nerve fibers will become specialized to detect pressure, pain, temperature, or itch.

Neurologists measure this sensitivity using two-point discrimination — the minimum distance between two points on the skin that a person can identify as distinct stimuli rather than a single one. Not surprisingly, acuity is greatest (and the two-point threshold is lowest) in the most densely nerve-packed areas of the body, like the fingers and lips. By contrast, you can distinguish two stimuli on your back only if they are several centimeters apart.
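A very simplified sketch shows why receptor spacing sets the two-point threshold. It assumes each touch activates only its nearest receptor and that two touches feel distinct when at least one unstimulated receptor lies between them; real receptive fields are larger and overlap, and the spacings used are hypothetical.

```python
def nearest_receptor(position_mm: float, spacing_mm: float) -> int:
    """Index of the receptor closest to a point touched on the skin."""
    return round(position_mm / spacing_mm)


def feels_like_two(separation_mm: float, spacing_mm: float) -> bool:
    """Two touches feel distinct only if at least one unstimulated receptor lies between them."""
    first = nearest_receptor(0.0, spacing_mm)
    second = nearest_receptor(separation_mm, spacing_mm)
    return abs(second - first) >= 2


# Hypothetical receptor spacings, chosen only to contrast dense and sparse skin.
FINGERTIP_SPACING_MM = 1.0
BACK_SPACING_MM = 20.0

print(feels_like_two(3.0, FINGERTIP_SPACING_MM))  # True
print(feels_like_two(3.0, BACK_SPACING_MM))       # False
print(feels_like_two(45.0, BACK_SPACING_MM))      # True: several centimeters apart
```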

Pain and Itch Signals

Pain is both a sensory experience and an emotional experience. The sensory component signals tissue damage or the potential for damage, and the emotional component makes the experience unpleasant and distressing. Pain is primarily a warning signal — a way your brain tells itself that something is wrong with the body. Pain occurs when special sensory fibers, called nociceptors, respond to stimuli that can cause tissue damage. Normally, nociceptors respond only to strong or high-threshold stimuli. This response helps us detect when something is truly dangerous. Different types of nociceptors are sensitive to different types of painful stimuli, such as thermal (heat or cold), mechanical (wounds), or chemical (toxins or venoms). Interestingly, these same receptors also respond to chemicals in spicy food, like the capsaicin in hot peppers, which might produce a burning pain, depending on your sensitivity. Some types of nociceptors respond only to chemical stimuli that cause itch. A well-known example is histamine receptors that are activated when skin irritation, bug bites, and allergies trigger the release of histamine inside your body. But scientists have recently identified other itch-specific receptors as well.

When tissue injury occurs, it triggers the release of various chemicals at the site of damage, causing inflammation. This inflammatory "soup" then triggers nerve impulses that cause you to continue feeling pain, which helps you protect a damaged part of the body. Prostaglandins, for example, enhance the sensitivity of receptors to tissue damage, making you feel pain more intensely. They also contribute to a condition called allodynia, in which even a soft touch can produce pain, as on badly sunburned skin. A long-lasting injury may lead to nervous system changes that enhance and prolong the perceived pain, even in the absence of painful stimuli. The resulting state of hypersensitivity to pain, called neuropathic pain, is caused by a malfunctioning nervous system rather than by an injury. An example of this condition is diabetic neuropathy, in which nerves in the hands or feet are damaged by prolonged exposure to high blood sugar and send signals of numbness, tingling, burning, or aching pain.

Sending and Receiving Messages

Pain and itch messages make their way to the spinal cord via small A-delta fibers and even smaller C fibers. The myelin sheath covering A-delta fibers helps nerve impulses travel faster, and these fibers evoke the immediate, sharp, and easily identified pain produced, for example, by a pinprick. The unmyelinated C fibers transmit pain messages more slowly; their nerve endings spread over a relatively large area and produce a dull, diffuse ache or pain whose origin is harder to pinpoint. Pain and itch signals travel up the spinal cord through the brainstem and then to the thalamus (the ascending pathway). From there, they are relayed to several areas of the cerebral cortex that monitor the state of the body and transform pain and itch messages into conscious experience. Once the brain is aware of these messages, it has the opportunity to change how it responds to them.

Pain Management

Why do different people, when exposed to the same painful stimulus, experience the pain differently? How itchy or painful something feels obviously depends on the strength of the stimulus, but also on a person's emotional state and the setting in which the injury occurs. When pain messages arrive in the cortex, the brain can process them in different ways. The cortex sends pain messages to a region of the brainstem called the periaqueductal gray matter. Through its connections with other brainstem nuclei, the periaqueductal gray matter activates descending pathways that modulate pain. These pathways also send messages to networks that release endorphins, opioids produced by the body that act like morphine. Adrenaline released in emotionally stressful situations, such as a car accident, also acts as an analgesic, a substance that relieves pain without a loss of consciousness. The body's release of these chemicals helps regulate and reduce pain by intercepting the pain signals ascending in the spinal cord and brainstem.

Although these brain circuits exist in everyone, their efficacy and sensitivity will influence how much pain a person feels. They also explain why some people develop chronic pain that does not respond to regular treatment. Research shows that endorphins act at multiple types of opioid receptors in the brain and spinal cord, which has important implications for pain therapy, especially for people who suffer from intense chronic pain. For example, opioid drugs can now be delivered to the spinal cord before, during, and after surgery to reduce pain. And scientists are studying ways to electrically stimulate the spinal cord to relieve pain while avoiding the potentially harmful effects of long-term opioid use. Variations in people’s perceptions of pain also suggest avenues of research for treatments that are tailored to individual patients.

It is now clear that no single brain area is responsible for the perception of pain and itch. Emotional and sensory components create a mosaic of activity that influences how we perceive pain. In fact, some treatment methods — such as meditation, hypnosis, massages, cognitive behavioral therapy, and the controlled use of cannabis — have successfully targeted the emotional component rather than stopping the painful stimulus itself. Patients with chronic pain still feel the pain, but it no longer “hurts” as much. We don’t fully understand how these therapies work, but brain imaging tools have revealed that cannabis, for example, suppresses activity in only a few pain areas in the brain, primarily those that are part of the limbic system, the emotional center of the brain.

The retina is home to three types of neurons — photoreceptors, interneurons, and ganglion cells — which are organized into several layers. These cells communicate extensively with each other before sending information along to the brain. Counterintuitively, the light-sensitive photoreceptors — rods and cones — are located in the most peripheral layer of the retina. This means that after entering through the cornea and lens, light travels through the ganglion cells and interneurons before it reaches the photoreceptors. Ganglion cells and interneurons do not respond directly to light, but they process and relay information from the photoreceptors; the axons of ganglion cells exit the retina together, forming the optic nerve.